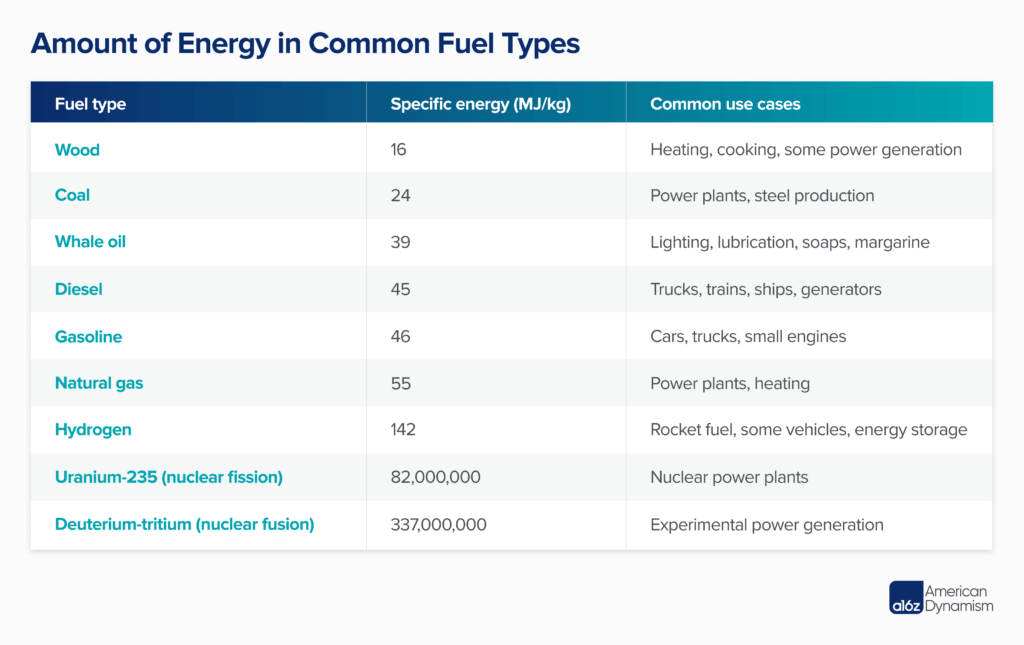

Nearly all of our electricity is generated from heat powering a steam turbine. Historically, this energy conversion has been achieved through burning wood or fossil fuels. However, while these sorts of reactions release a large amount of chemical energy, they are nothing compared to the energy of a nuclear reaction.

Here is the specific energy, or the amount of energy (megajoules) per kilogram, in common fuel types. As you can see, nuclear reactions generate an enormous amount of it—nearly 1.8 million times the energy from burning gasoline.

Effectively harnessing nuclear energy does require sophisticated engineering in the form of power plants, but, once online, nothing can beat them in terms of land-use efficiency: a nuclear power plant can generate over 57,000 MWh/acre compared to solar at 200 MWh/acre, or a natural gas plant at 1,000 MWh/acre. For further perspective, a single reactor can power over 1 million homes with over 90% uptime. In fact, our existing, and largely paid off, nuclear fleet also produces the cheapest baseload electrical power at around $30/MWh. And because nuclear energy doesn’t involve hydrocarbon combustion, it generates power without producing carbon dioxide emissions.

However, critics often argue that nuclear power is unsafe or unsustainable, citing fears about potential accidents and the accumulation of nuclear waste. These concerns are largely unfounded and reveal a misunderstanding about advancements in nuclear technology. Nonetheless, they have resulted in a state of affairs where new nuclear reactors are exceedingly rare in the United States and, thanks largely to onerous regulations, typically take many years and cost billions of dollars to complete.

For example: The reactor Vogtle 3 recently went online in Georgia, becoming the first newly built nuclear power plant to generate power in the United States since 1996. The project also took 7 years longer than expected and is estimated to cost more than $30 billion, once its sister reactor, Vogtle 4, is fully online—more than twice the original projection.

The baggage around nuclear power largely stems from an inaccurate, almost mystical notion of how it works. In reality, contemporary nuclear power plants boast exceptional safety records and produce astonishingly minimal waste relative to their immense energy output. Additionally, their compact footprint allows for versatile placement: They don’t require areas with ample sun or wind, are dispatchable when needed, and realistically could be placed on-site at particularly important facilities as a steady supply of clean power.

But if we’re going to normalize nuclear power as a reliable and well-understood energy source, it’s essential to understand how we’ve ended up in our current situation. It’s also important to recognize that although much of this post focuses on large-scale nuclear fission reactors—because that’s what have been delivering civilian power for the past several decades—smaller, more modular reactors will likely play a major role going forward, perhaps as a means to address more local, and even hyper-local, energy needs.

The current state of affairs: Lots of nuclear, (almost) none of it new

The United States actually gets about 20% of its electricity from nuclear fission, and we operate around 20% of the world’s approximately 450 reactors. You might think Japan or France, or even China, are beating us in reactor count; nope, the United States is the largest producer of nuclear power. In fact, our fleet generates nearly double the energy of France’s, even though France gets up to 70% of its electricity from fission. We’ve also gotten very good at operating reactors without incident, increasing our capacity factor, or uptime, to more than 90% (France is only around 75%).
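Capacity factor is the ratio of the energy a plant actually delivers to what it would deliver running at full nameplate output all year. As a rough illustration, here is a minimal Python sketch of what the gap between roughly 90% and 75% means in annual output. The 1 GW nameplate size and the function name are assumptions chosen for illustration, not figures for any specific reactor.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_gwh(nameplate_gw: float, capacity_factor: float) -> float:
    """Energy delivered in one year (GWh) at a given capacity factor."""
    if not 0.0 <= capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be between 0 and 1")
    return nameplate_gw * HOURS_PER_YEAR * capacity_factor


# Assumed 1 GW unit; 0.90 and 0.75 are the approximate capacity factors quoted above.
high = annual_energy_gwh(1.0, 0.90)
low = annual_energy_gwh(1.0, 0.75)

print(f"90% capacity factor: {high:,.0f} GWh/year")
print(f"75% capacity factor: {low:,.0f} GWh/year")
print(f"Difference:          {high - low:,.0f} GWh/year")
```

Under these assumptions, the same hardware delivers about a fifth more energy per year at the higher capacity factor, which is why uptime matters so much for assets with high upfront costs.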

The problem: We largely stopped building new reactors in the 1980s. Today, our nuclear fleet is among the oldest in the world, with an average reactor age of approximately 40 years. There are complex reasons for this, but at a high level, the success of nuclear power is much more about project management, financing, and policy than it is about cutting-edge engineering or safety. We already know how to build plants and safely operate reactors; we just need to get better at reducing construction hurdles and executing on new reactor deployment.

Our nuclear rollout peaked in the 1970s during the oil crisis, when President Nixon championed “Project Independence,” which aimed to build 1,000 nuclear reactors by 2000. Interest in nuclear energy was at an all-time high; even France was on board with its similar “Messmer Plan.” However, while France went through with its plan, the United States did not.

The “Whoops” disaster is a good starting point to understand what happened. Washington Public Power Supply System (WPPSS) began construction on five nuclear reactors in 1973. Though these reactors were similar designs, they were not standardized. Different construction crews put together different parts from different suppliers. Naturally, this led to dramatic cost overruns and delays, and only one unit was ever completed. The steelman argument is that WPPSS wanted to figure out which one worked best, but they never even got that far.

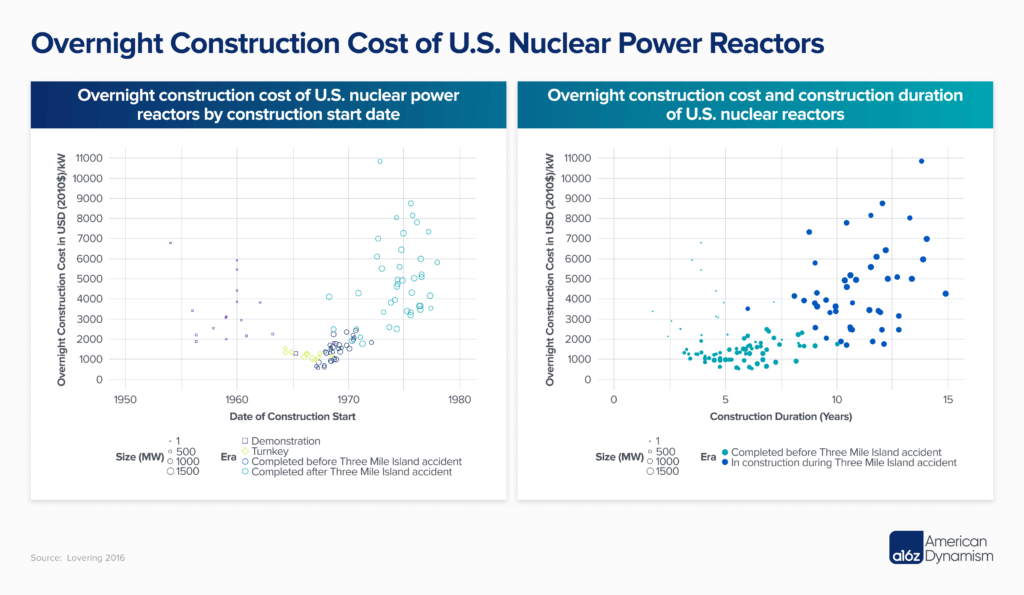

And then, in the 1980s, the overnight cost (i.e., the cost to build “over a single night,” or ignoring interest rates) and the time to build new plants increased dramatically. In 1979, we had Three Mile Island, and in 1986, there was Chernobyl—this was a rocky time for public perception of nuclear power. Naturally, environmentalists and regulators stepped in.

Author and engineer Brian Potter has an excellent essay digging into this issue, but there are a few key points I’ll focus on.

If you were to break down the price of building a new reactor, you’d recognize that indirect costs (that is, services and financing rather than materials) make up the largest proportion. From the 1980s onward, the ranks of expensive personnel, like engineers, inspectors, managers, and consultants, grew to meet rising regulatory scrutiny. Moreover, regulators would often force design changes mid-construction to meet a newly passed standard, like protecting reactors from potential 9/11-like aircraft impacts.

Whatever the case, in the United States today, new-build nuclear power plants cost over $10,000 per kilowatt. The Department of Energy estimates this rate will need to drop to around $3,600 per kilowatt before nuclear power can really scale, so for a 1 gigawatt plant, we’d want to aim for about $3.6 billion in construction costs compared with the current cost of about $10 billion. Furthermore, potential customers, like utilities, are unlikely to invest in nuclear reactors if they’re continually over budget, delayed, or entirely abandoned.
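The per-kilowatt arithmetic above is easy to check. This short Python sketch scales a unit cost up to a whole plant and reports the reduction needed to hit the target; the function name is illustrative, and the inputs are simply the round numbers quoted in the text.

```python
KW_PER_GW = 1_000_000


def construction_cost_usd(capacity_gw: float, usd_per_kw: float) -> float:
    """Total construction cost implied by a per-kilowatt unit cost."""
    return capacity_gw * KW_PER_GW * usd_per_kw


current = construction_cost_usd(1.0, 10_000)  # "over $10,000 per kilowatt"
target = construction_cost_usd(1.0, 3_600)    # Department of Energy target quoted above

print(f"Current: ${current / 1e9:.1f} billion")
print(f"Target:  ${target / 1e9:.1f} billion")
print(f"Required reduction: {1 - target / current:.0%}")
```

In other words, hitting the target means cutting construction costs by roughly two-thirds for a plant of the same size.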

A strict regulatory environment is a core reason why first-of-a-kind reactor construction is very expensive, but there are other areas of improvement that are within a developer’s control. For example, Westinghouse’s miscalculation of prefabricated part timelines, reliance on inexperienced construction crews, and misjudgment of known regulatory hurdles led to Vogtle 3’s pyrrhic victory. They weren’t even done with the design before they started building, leading to inevitable changes after long lead-time items were already in development.

The tides are turning in favor of nuclear energy

But after decades of stagnation, there’s good news.

First, future reactors don’t need to be this expensive. The Department of Energy identifies experience building the same reactor design as being a core area for cost reductions, largely from declining labor costs associated with better planning. We should certainly encourage EPC firms that worked on Vogtle to share expertise or align themselves with new project proposals. Learning curves do exist in nuclear power; we just need to be smarter about achieving them (and it seems like the next AP1000 under construction, the same model reactor and also built at Vogtle, is on that path).

We know it’s possible at a massive scale, too. Palo Verde Nuclear Power Station, our nation’s largest power plant, with generating capacity at nearly 4 GW, was built in the middle of the Arizona desert using treated sewage water for cooling. What’s more, construction was underway during the Three Mile Island incident in 1979, and it was still completed in 12 years for around $12 billion in today’s dollars, putting it at around $3,000/kW. We absolutely can build nuclear plants cheaply. A tight partnership between regulators, reactor designers, and EPC firms directly led to the success of Palo Verde.

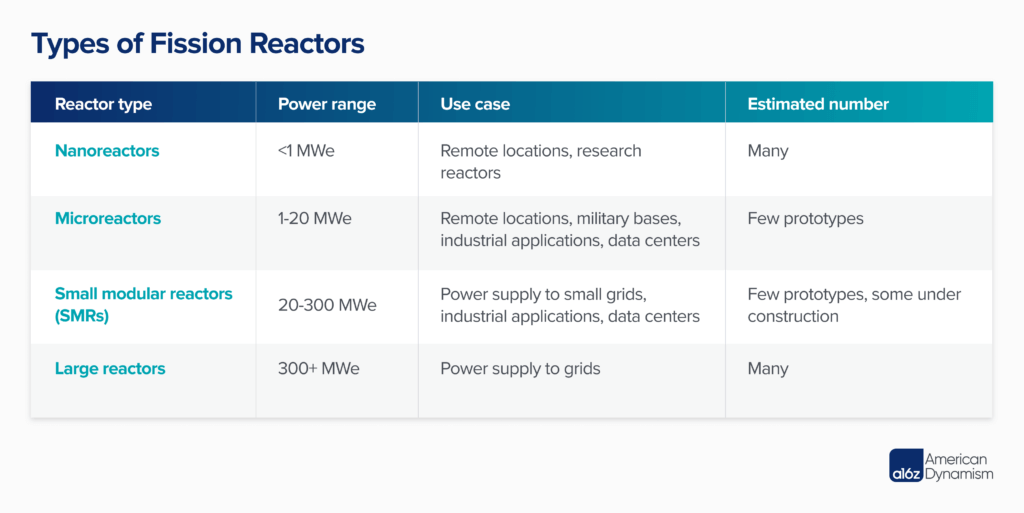

Depending on the size of the reactor, different use cases make sense (see examples below), some of which can be constructed much more quickly and cost-effectively than the traditional large reactors we normally think of.

While the table above includes nanoreactors and microreactors, there does not appear to be a universally agreed-upon definition of these categories. Some people use the term “SMR” to describe anything under 300 MWe, but it’s arguably more useful to draw some distinctions. Conceptually, you can think of SMRs as small power plants with mass-manufactured parts, whereas microreactors have the additional benefit of being small enough to be carried by a truck. For their part, nanoreactors are mostly for research purposes today. In fact, many top universities, like MIT and North Carolina State, have tiny nuclear reactors on campus.

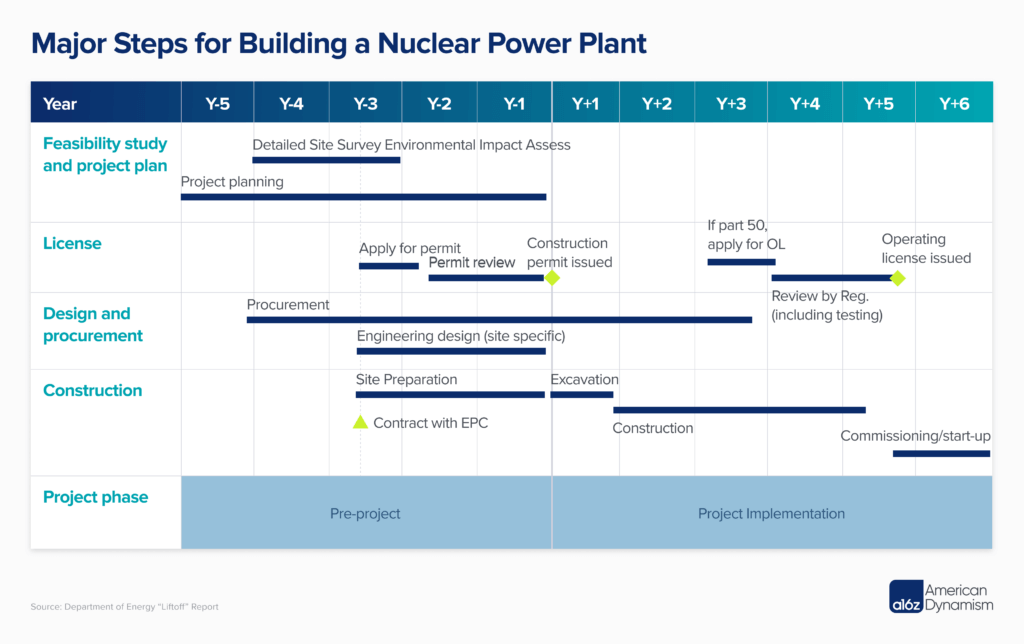

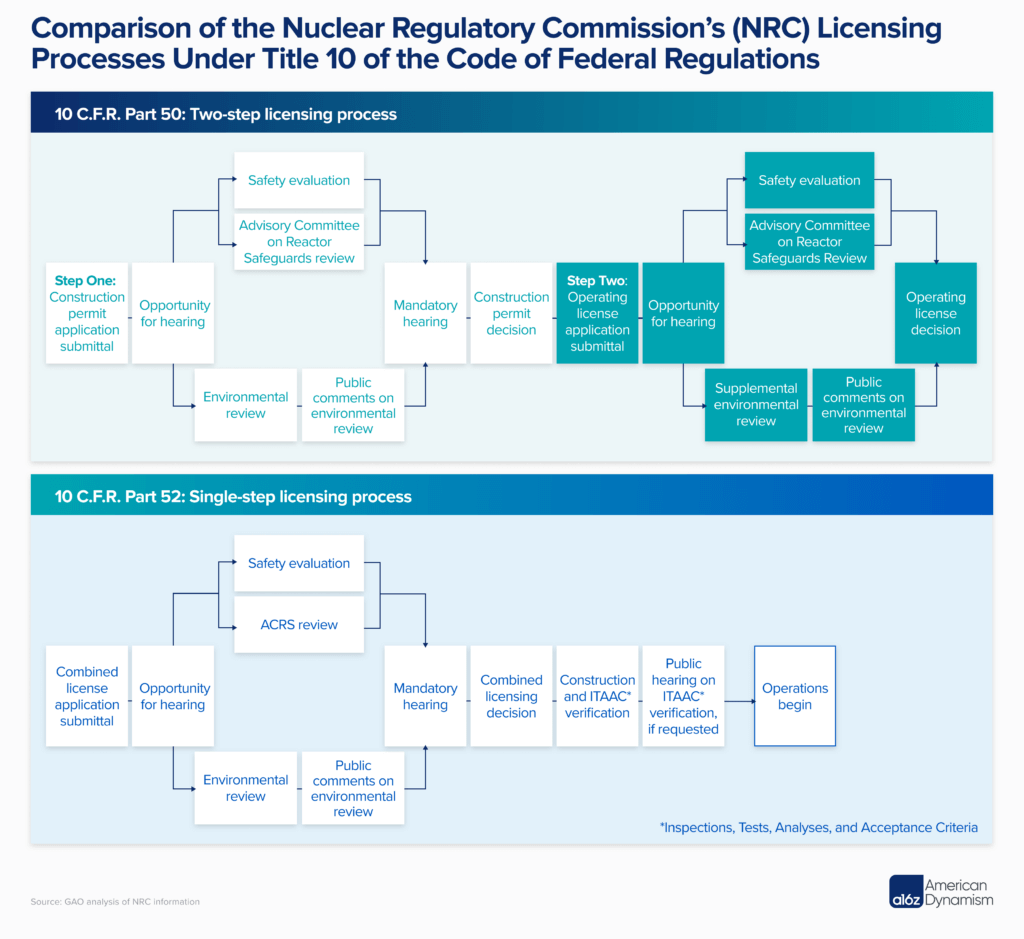

Over the years, the Nuclear Regulatory Commission (NRC), the body overseeing our nation’s civilian nuclear industry, has developed a series of frameworks to address the evolving landscape of the nuclear industry. Part 50 represents the traditional set of regulations, primarily formulated for the licensing, construction, and operation of the earlier generations of commercial nuclear power plants. As the industry advanced and newer reactor designs emerged, the NRC recognized the need for a more streamlined process.

This led to Part 52, which introduced the concept of combined licenses for both construction and operation in 1989. Moreover, it employed “design certifications,” an approach allowing multiple plant applications to reference a single certified design, thus minimizing redundancy and accelerating the approval process. The downside is that combined licensing presents challenges when changes do need to be made to the underlying design, like when the NRC required Westinghouse to update the AP1000, which directly led to project delays. Today, applicants can choose whether to be licensed under Part 50 or Part 52.

You can see a visualization of these processes below:

To make way for advanced reactors, the NRC saw the necessity for an even more adaptable and modernized regulatory approach. Enter Part 53, a set of regulations tailored specifically for the licensing of advanced nuclear reactors. Part 53 is still in the drafting period and is expected to be complete in 2025. However, it remains unclear if this new set of regulations will result in a truly more streamlined approach.

The NRC is also attempting to speed up the review process for new reactors by increasing licensing review staff. (Although the company going through the approval process also pays review staff an hourly rate, which seems…misaligned.) Outside of the NRC, the DoE is a strong supporter of advanced nuclear power and, as explained further below, can actually accelerate certain reactor designs that aren’t (yet) used to generate energy.

Perhaps most importantly, though, public sentiment toward nuclear energy is evolving as more people realize its potential to provide carbon-free, reliable energy. Even Governor Gavin Newsom in progressive California provided a loan to extend the life of the Diablo Canyon Nuclear Power Plant. Yes, the NRC adding regulatory hurdles mid-project will always lead to cost overruns and project delays, but many of those hurdles stem from public concern that appears to be waning.

What about safety?

While sentiment is shifting in favor of nuclear energy, it’s not out of the woods yet. Many people still have fears around safety and, whatever their veracity, where there are safety concerns, there are regulations. However, nuclear power plants are much safer than many people realize (despite what you might’ve seen on The Simpsons). Below, we dispel two major myths.

Radiation and nuclear waste

When it comes to nuclear power plant radiation, the ALARA (As Low as “Reasonably” Achievable) framework insists on reducing nearly all possible radiation leakage (there is, however, no clear definition of what reasonable actually means). Underlying this is the Linear No-Threshold model, which assumes there is no safe dose of radiation. The problem is that limiting any chance of exposure demands an excessive amount of engineering, and there is significant evidence against the idea that no dose of radiation is safe.

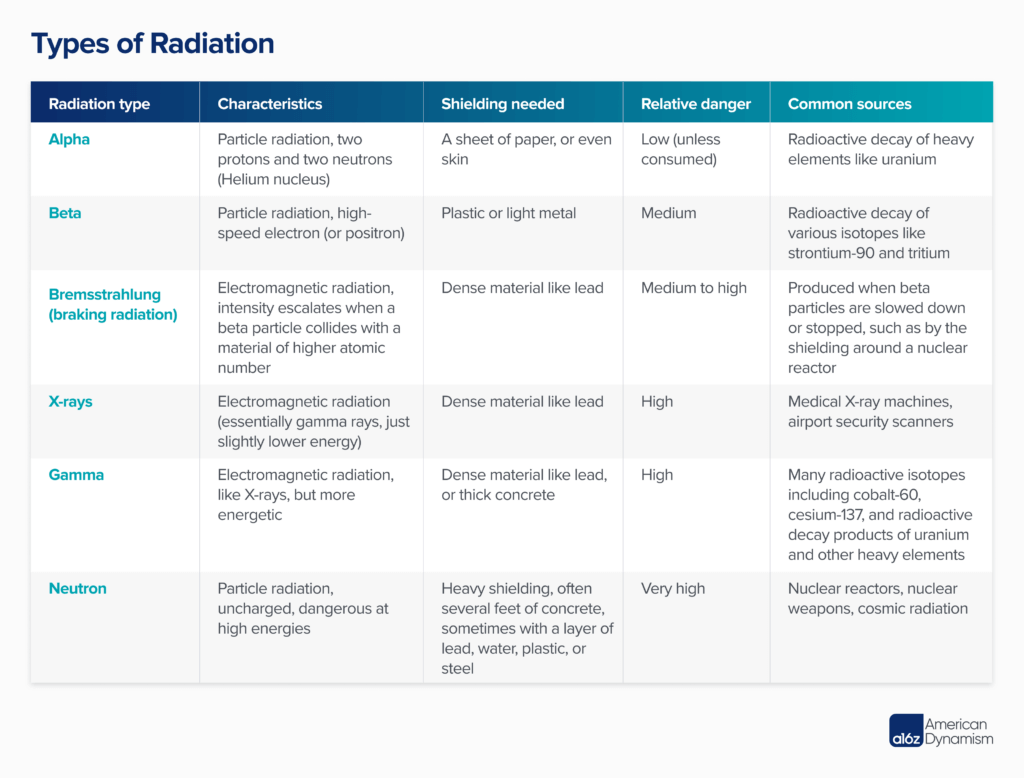

“Safety” reasoning like this does not exist in other sectors where humans also might be exposed to higher levels of radiation, such as simply building your home at a high altitude or flying on a plane. Radiation is everywhere; even our bodies emit low levels of gamma radiation. Reactor designers understand the types of radiation that fission reactions produce, and already go to great lengths to limit meaningful leakage.

There are several types of ionizing radiation that present different safety challenges when it comes to nuclear reactions.

Many people are also concerned about what to do with the radioactive waste that’s generated by fission plants. This deserves a little more explanation, because it’s an issue that can kill nuclear projects before they even begin. High-level, or particularly troublesome, nuclear waste contains two categories of radioactive material.

The first includes the relatively short-lived fission products, including strontium-90, cesium-137, and iodine-131. There is also cobalt-60, produced by neutron activation when a stray neutron is absorbed by the cobalt-59 found within the special steel used in many reactor walls. Cobalt-60 has a short half-life of approximately 5 years, but emits powerful gamma radiation. Collectively, these radioisotopes decay quickly enough that their radiation can be easily managed over their relatively short radioactive periods (and some can make great batteries).
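To put a half-life of roughly five years in perspective, the fraction of an isotope remaining after a given time is 0.5 raised to the number of half-lives elapsed. The Python sketch below applies that standard decay relation to cobalt-60, using the approximate 5-year figure from above rather than a precise measured value; the function and constant names are illustrative.

```python
def fraction_remaining(years: float, half_life_years: float) -> float:
    """Fraction of the original radioisotope left after `years` of decay."""
    if half_life_years <= 0:
        raise ValueError("half_life_years must be positive")
    return 0.5 ** (years / half_life_years)


COBALT_60_HALF_LIFE_YEARS = 5.0  # approximate figure used in the text

for years in (5, 25, 50):
    left = fraction_remaining(years, COBALT_60_HALF_LIFE_YEARS)
    print(f"After {years:>2} years: {left:.4%} of the cobalt-60 remains")
```

After ten half-lives, less than a thousandth of the original material is left, which is the sense in which these isotopes are "short-lived."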

The second, more controversial, category consists of transuranic (or heavier than uranium) elements that are left over in spent fuel. A fuel’s “burnup” measures how much of the fuel is consumed, with higher burnup indicating greater fuel efficiency. However, changes in burnup can also lead to changes in waste composition, including the levels of transuranic elements. The formation of transuranic waste occurs when some uranium nuclei absorb a neutron but do not split, yielding a heavier, unstable element. This process is influenced by specific conditions within the reactor, but at a high level, is a matter of probability relating to the fundamentals of nuclear reactions. Resulting products include radioisotopes of elements like plutonium, americium, and curium that have half-lives of thousands of years or more (though most of this radiation is released in the first couple of hundred years).

However sinister this sounds, the reality is that we know how to shield from radiation, and the amount of this waste is not a significant concern, particularly relative to a plant’s immense power output. A 1 gigawatt nuclear power plant, capable of powering over 1 million homes annually, uses approximately 25 metric tons of fuel per year (uranium is dense; this is around 600 gallons in volume). Of this, only around 3% is converted into fission products, with the majority of the remaining spent fuel being directly recyclable (further discussed below). In contrast, a coal plant with equivalent output consumes over 3 million metric tons of coal annually, emitting over 13 billion pounds of CO2 in the process.
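These figures can be sanity-checked with a few lines of Python. The sketch below treats the 25 metric tons as solid uranium dioxide at its theoretical density of about 10.97 g/cm³. That density, and the choice to ignore cladding and fuel assembly hardware, are assumptions added here and do not come from the figures above. The other inputs are the round numbers quoted in the text, and all names are illustrative.

```python
UO2_DENSITY_KG_PER_M3 = 10_970   # assumption: theoretical density of uranium dioxide
US_GALLONS_PER_M3 = 264.172
KG_PER_POUND = 0.45359237
KG_PER_TONNE = 1_000


def fuel_volume_gallons(fuel_tonnes: float,
                        density_kg_per_m3: float = UO2_DENSITY_KG_PER_M3) -> float:
    """Volume of a fuel mass in US gallons, treating it as one solid block."""
    volume_m3 = fuel_tonnes * KG_PER_TONNE / density_kg_per_m3
    return volume_m3 * US_GALLONS_PER_M3


def pounds_to_tonnes(pounds: float) -> float:
    """Convert pounds to metric tons."""
    return pounds * KG_PER_POUND / KG_PER_TONNE


nuclear_fuel_tonnes = 25      # per year for a 1 GW plant, as quoted above
coal_tonnes = 3_000_000       # per year for an equivalent coal plant, as quoted above
coal_co2_pounds = 13e9        # annual CO2 from that coal plant, as quoted above

print(f"Fuel volume: {fuel_volume_gallons(nuclear_fuel_tonnes):,.0f} US gallons")
print(f"Coal-to-uranium mass ratio: {coal_tonnes / nuclear_fuel_tonnes:,.0f} to 1")
print(f"Coal CO2: {pounds_to_tonnes(coal_co2_pounds) / 1e6:.1f} million metric tons")
```

Under the density assumption, the volume lands close to the 600-gallon figure, and the coal plant burns on the order of a hundred thousand times more mass for the same output.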

What to do with long-lived transuranic waste is indeed an important question, but storing it today is largely an exaggerated issue. Nearly all waste at American nuclear plants is stored on-site or nearby, first in pools and then in dry casks, and these are perfectly safe to be around (again: we know how to shield against radiation). There is a small amount of this waste, and nuclear plants already have the space, security, and safety systems to readily handle it.

While there have been attempts at a centralized storage facility, like Yucca Mountain in Nevada, no such place exists today in the United States. The reason Yucca Mountain hasn’t been utilized stems from a combination of factors, notably political opposition, legal challenges, and the ever-pervasive “Not In My Back Yard” (NIMBY) sentiment. Despite the site being deemed scientifically sound for waste storage, these obstacles have prevented its operation. In a more practical sense, some even view it as unnecessary at this time given how straightforward storing waste on-site actually is. Long term, however, scaling domestic nuclear power will likely require a more centralized solution.

Many European nations are ahead of the U.S. when it comes to nuclear “waste.” France reprocesses spent fuel to extract the remaining uranium-238, uranium-235, and plutonium-239, then vitrifies what’s left over (i.e., turns it into glass) and stores it; this type of spent fuel recycling is known as a closed fuel cycle, and it relies upon the use of mixed oxide (MOX) fuel, which contains a blend of uranium and plutonium extracted from “waste.” And across Europe, there are even efforts to store spent fuel deep underground. Perhaps most excitingly, there are known methods to transmute fission products or transuranics into less troublesome radioisotopes, breed new fuel, or even burn waste directly using fast fission. There are also fuel cycles in development using thorium-232 as fertile material to breed fissile uranium-233 within the reactor, instead of starting with uranium-235. All or some of these solutions will be increasingly compelling as the United States builds more nuclear power plants.

Nuclear meltdowns

Another popular fear is that of a nuclear meltdown, like what happened at Chernobyl (sort of). A meltdown can occur when a reactor’s cooling system fails to maintain the fuel rods at safe temperatures, leading to the fuel melting. In pressurized water reactors (PWRs), the primary coolant (water) is kept under high pressure to prevent it from boiling. If this pressure is lost or if there’s insufficient cooling, the water can turn to steam, reducing its cooling efficiency. This can cause the confinement materials to melt and, if pressurized systems burst, lead to an explosion. It is this combination of events that can lead to radioactive fuel breaching shielding structures and being exposed (not good).

However, modern reactor designs have inherent passive safety mechanisms that do not depend on externally powered coolant systems that could fail in a disaster. For example, modern designs use the natural circulation of fluids instead of externally-powered pumps. The Westinghouse AP1000, one of the most modern PWR designs in service, is assessed to be 100 times safer than previous iterations that were already incredibly resilient. And newer reactor types, some of which should be able to achieve NRC approval in the near future, use different fuels, leverage powerful software to eliminate human error, and have even more advanced passive safety mechanisms to prevent meltdowns.

Radiant, for example, is building a gas-cooled reactor that utilizes TRISO fuel pellets. These pellets encapsulate each particle of fuel, effectively trapping the fission products and making spent fuel movement easier. Moreover, Radiant’s reactor uses helium as its coolant. As a chemically inert gas, helium mitigates many of the risks associated with water-based coolants, like corrosion or boiling. Furthermore, due to its low neutron absorption, helium avoids some of the radiological challenges that must be accounted for in other reactors. Helium can also run at very high temperatures, which makes the reactor more thermally efficient.

How nuclear progress is unfolding

Despite the relative safety of modern nuclear reactors and waste-management techniques, we likely won’t see the levels of nuclear energy we need until a couple of major changes happen.

One is that the NRC approval process becomes more efficient and access to more highly enriched uranium fuel improves, making the significant investment required for meaningful advances more appealing to companies. The good news on this front, as noted above, is that the agency actually appears to be trying to streamline and expedite the approval process for advanced reactor designs, and is aware of the industry’s concerns about fuel and mid-construction changes.

At present, there’s also a catch-22 situation: The NRC requires data to determine a reactor’s safety before granting approval, yet one cannot operate a reactor to gather this data without said approval. While fixing this process is an ongoing effort, startups aren’t just sitting on their hands waiting. Some U.S. startups are opting to build their first reactors outside of the United States, in countries with less regulation and a more dire need for energy innovation. Others might begin life inside a university or national lab as small reactors where, especially if they’re not being used to generate energy, different paths to approval present themselves. If initial test reactors are at a government lab, for example, there is the possibility of going through the DoE for initial testing approval rather than the NRC. Naturally, the DoE is more progressive when it comes to new designs, and these early tests under DoE jurisdiction can accelerate NRC approval.

Another method is to go through the military. The United States Navy, in particular, has built and operated hundreds of small reactors over several decades. For things like aircraft carriers and submarines, the Navy values the strategic advantage of having a longer operational window without needing to go to port (in fact, it would probably be cheaper to run them on fossil fuels). When it comes to bases, most of the military uses diesel, which, by the time it reaches faraway locations, can have an all-in cost of hundreds of dollars a gallon. Nuclear reactors minimize supply chain issues and offer energy security (and, of course, the military knows how to transport radioactive material). Importantly, the military is also able to tear through regulatory red tape when it comes to national security-related projects, potentially reducing the time it takes to turn the reactor on and generate data, and, thus, receive NRC approval for civil power generation.

Ultimately, though, what matters most is the commercial viability of new reactors: Even if they’re approved, will someone buy them? There are strong economies of scale when it comes to large nuclear power plants, so competing directly in the wholesale markets for grid power can be difficult until newer firms can drive down unit costs.

The strategy for many startups is to build smaller plants first, taking advantage of more scalable manufacturing practices to iterate and drive down costs. This is the core principle behind the rise of SMRs and microreactors. These smaller projects might be underwritten without ridiculously high financing costs, making them far more appealing to utilities and other buyers considering investment in new power-generating assets. Typically, these Generation IV reactors run at higher temperatures with more advanced fuels to optimize for power output, fuel efficiency, and safety, without increasing size or costs.

Beyond the military, there are commercial use cases that demand similar levels of power reliability. For example, many factories, industrial parks, and data centers seek a stable supply of clean power at a reasonable price. In fact, many firms are paying a premium for it, entering longer-term power purchase agreements, or PPAs, to reduce volatility. Because reactors have such high upfront costs, however, they must be operating nearly 24/7 to break even. This might be hard to do profitably in a competitive electricity market, but being always-on is a selling point for many PPA customers. As price volatility, clean energy mandates, and rolling blackouts increase, alongside an uptick in power-intensive data center workloads like training AI models, this use case becomes increasingly important.

A new generation of reactors shows strong promise, with many starting small and refining designs at unit prices that utilities can stomach. They are also, by design, standardized, making learning curves readily achievable, and it seems like data centers and utilities are interested. Ultimately, the companies that are able to receive approval, convince customers to make orders, and deploy those reactors on time and on budget will be able to secure energy market share, regardless of reactor design.

We need to be mass manufacturing nuclear power plants on the scale of hundreds of gigawatts. The best time to build a vast, civil nuclear fleet was 50 years ago. The second best time is now.

“Andreessen Horowitz is a private American venture capital firm, founded in 2009 by Marc Andreessen and Ben Horowitz. The company is headquartered in Menlo Park, California. As of April 2023, Andreessen Horowitz ranks first on the list of venture capital firms by AUM.”
