Few people love the software they use to get things done, and it’s no surprise why. Whether it’s a slide deck builder, a video editor, or a photo enhancer, today’s work tools were conceived decades ago, and it shows! Even best-in-class products often either feel too inflexible and unsophisticated for real work or have steep, inaccessible learning curves (we’re looking at you, Adobe InDesign).

Generative AI offers founders an opportunity to completely reinvent workflows — and will spawn a new cohort of companies that are not just AI-augmented, but fully AI-native. These companies will start from scratch with the technology we have now, and build new products around the generation, editing, and composition capabilities that are uniquely possible due to AI.

At the most basic level, we believe AI will help users do their existing work more efficiently. AI-native platforms will “up-level” user interactions with software, allowing users to delegate lower-skill tasks to an AI assistant and spend their time on higher-level thinking. This applies not only to traditional office workers, but also to small business owners, freelancers, creators, and artists, who arguably have even more complex demands on their time.

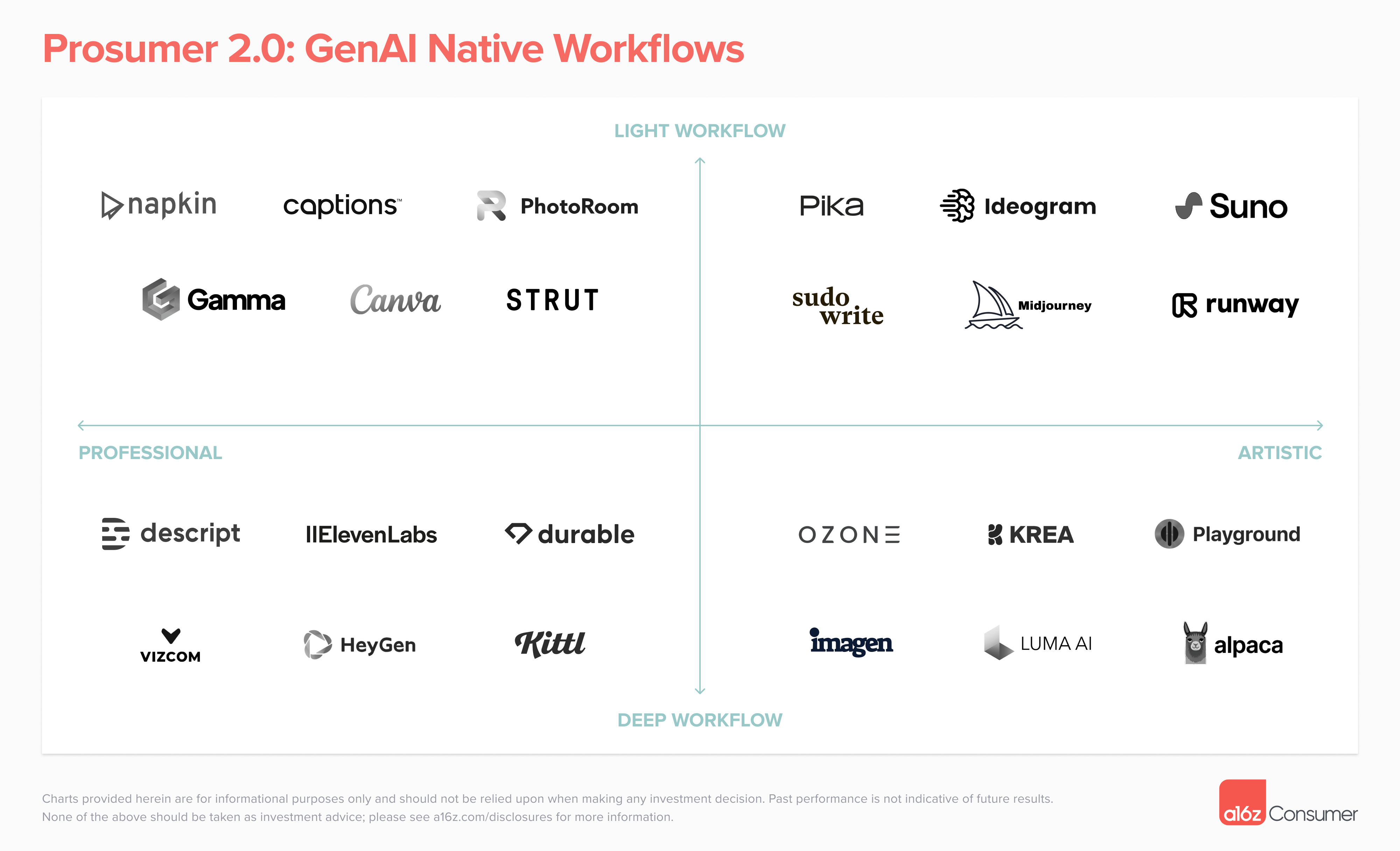

But AI will also help users unlock completely new skill sets, on both a technical and an aesthetic level. We’ve already seen this with products like Midjourney and ChatGPT’s Code Interpreter. Everyone can now be a programmer, a producer, a designer, or a musician, shrinking the gap between creativity and craft. With access to professional-grade yet consumer-friendly products with AI-powered workflows, everyone can be a part of a new generation of “prosumers.”

In this piece, we aim to highlight the features of today’s — and tomorrow’s — most successful Gen AI-native workflows, as well as hypothesize about how we see these products evolving.

What Will Gen AI-Native Prosumer Products Look Like?

All products with Gen AI-native workflows will share one crucial trait: translating cutting-edge models into an accessible, effective UI.

Users of workflow tools typically don’t care what infrastructure is behind a product; they care about how it helps them! While the technological leaps we’ve made with Generative AI are amazing, successful products will still start from a deep understanding of the user and their pain points. What can be abstracted away with AI? Where are the key “decision points” that need approval, if any? And where are the highest points of leverage?

There are a few key features we believe products in this category will have:

- Generation tools that kill the “blank page” problem. The earliest and most obvious consumer AI use cases have come from translating a natural language prompt into a media output — e.g., image, video, and text generators. The same will be true in prosumer. These tools might help transform true “blank pages” (e.g., a text prompt to slide deck), or take incremental assets (e.g., a sketch or an outline) and turn them into a more fleshed-out product.

Some companies will do this via a proprietary model, while others may mix or stitch together multiple models (open source, proprietary, or via API) behind the scenes. One example here is Vizcom’s rendering tool. Users can input a text prompt, sketch, or 3D model, and instantly get a photorealistic rendering to further iterate on.

Another example is Durable’s website builder product, which the company says has been used to generate more than 6 million sites so far. Users input their company name, segment, and location, and Durable will spit out a site for them to customize. As LLMs get more powerful, we expect to see products like Durable pull real information about your business (the history, team, reviews, logos, etc.) from elsewhere on the internet and social media, and produce an even more sophisticated output in a single generation.

- Multimodal (and multimedia!) combinations. Many creative projects require more than one type of content. For example, you may want to combine an image with text, music with video, or an animation with a voiceover. As of now, there isn’t one model that can generate all of these asset types. This creates an opportunity for workflow products which allow users to generate, refine, and stitch different content types in one place.

HeyGen’s avatar product is one example of such a tool. The company merges its own avatar and lip-dubbing models with ElevenLabs’ text-to-speech API to create realistic, talking video avatars (check out Justine’s here). HeyGen’s product also has templates and a Canva-esque editor to put avatars within a deck or video, create slides, or add text or other assets, eliminating the need to take your avatar to PowerPoint or Google Slides.

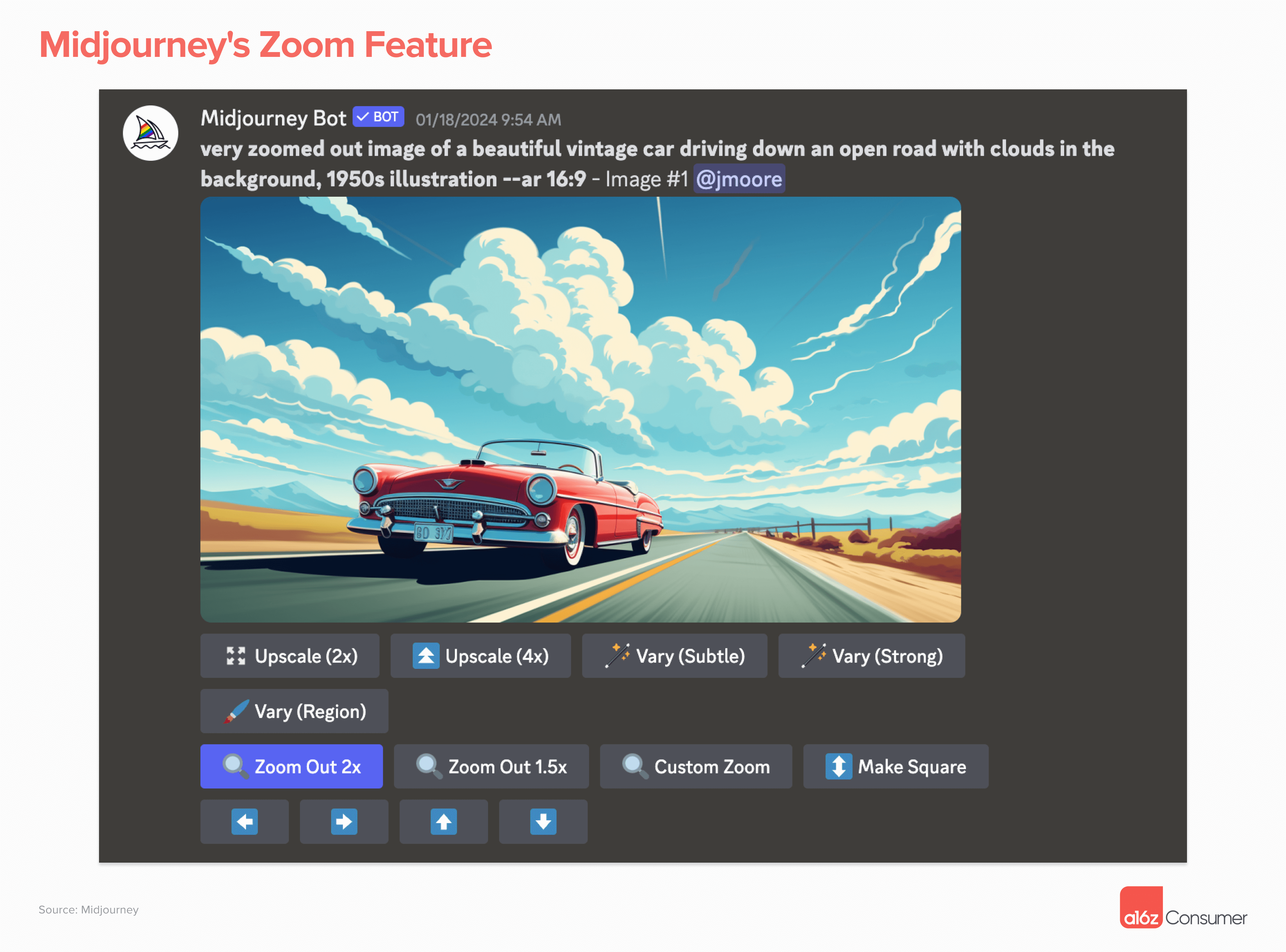

- Intelligent editors that enable more iteration. Almost no work product is “one shot” — especially with AI, when there’s inherent randomness in every generation. It’s rare that you get exactly what you want on the first run. Hitting the re-generate button and/or revising your prompt is a critical, but time-consuming and frustrating, part of the process.

The first wave of AI generation products didn’t allow for any iteration: you created an image (or video, or music), and that was it. If you re-ran the same prompt, you’d get completely different results. We’re now starting to see features that enable users to take an existing output and refine it without completely starting from scratch. Midjourney’s variation and zoom tools are a good example of this.

In video, Pika provides similar functionality. Users can take a clip they’ve already created and modify a specific region, for example by changing the gender or hair color of a character, or by adding or removing an object. Users can also expand the canvas by outpainting into the blank space around the existing video.

- In-platform refinement. Another crucial (and related) element of intelligent editing is refinement; the final 10% of polishing work is often the difference between something good and something great. But it can be a challenge to: (1) figure out what needs refining; and (2) make these refinements without needing to move into other products.

AI workflow products can help users identify what can be improved, and then automatically make these improvements. Think of this like Apple’s “auto-retouch” feature on photos, but as “auto-retouch” for anything! The most literal interpretation of this is upscaling, which platforms like Krea provide. Within one interface, users can generate an image or design and then enhance it — getting them much closer to a final product.

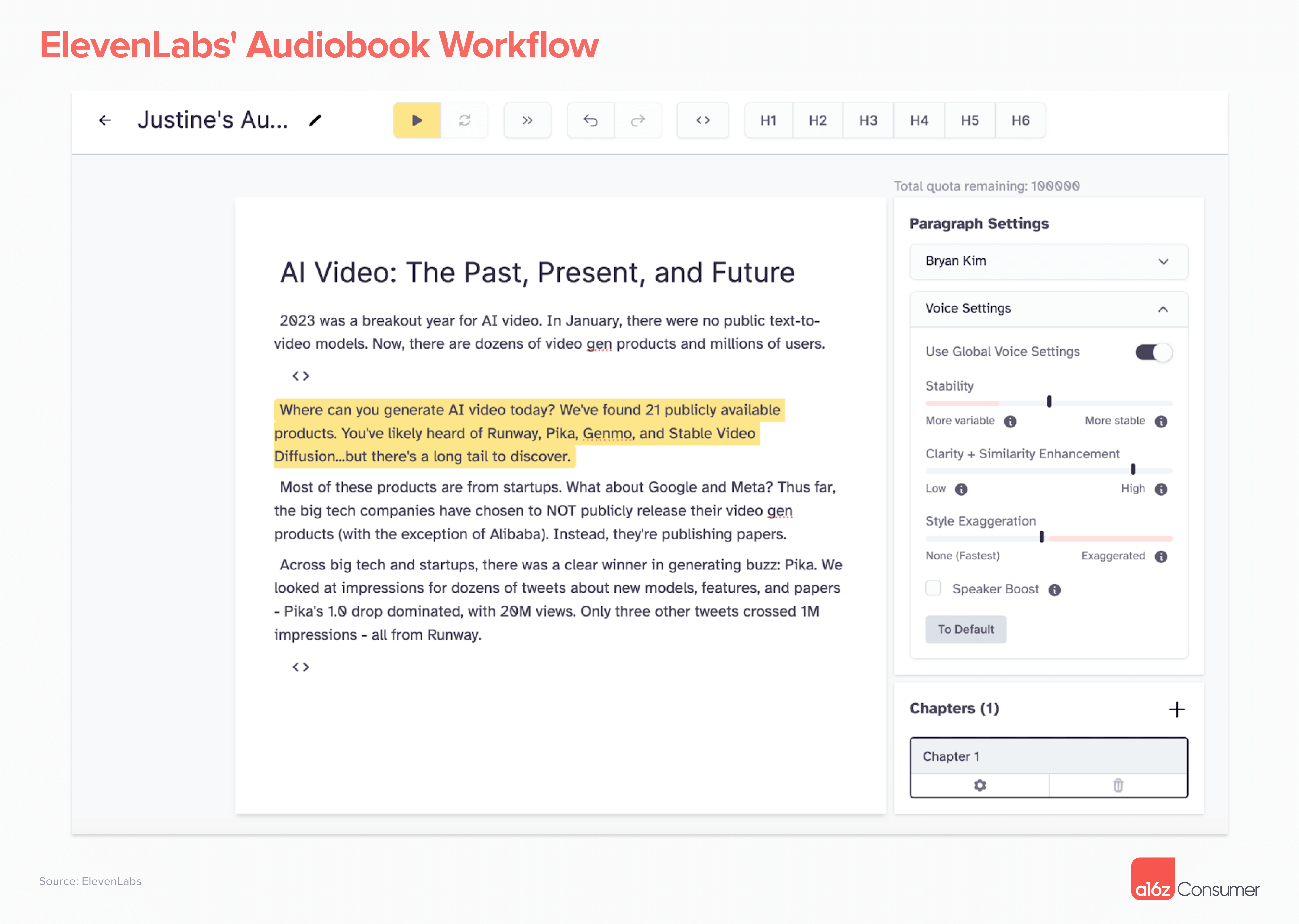

ElevenLabs’ audiobook workflow is another good example. You can use the tool to generate voices for specific characters to narrate sections of a book, and then refine the output by adjusting the pauses, stability, or clarity of a sentence or phrase.

- Output that is remixable and transposable. AI makes content uniquely flexible: every piece of content is a potential “jumping-off” point for another iteration. If you’ve ever copied and tweaked a prompt from someone else’s generation in Midjourney or ChatGPT, you’ve participated in this.

Platforms that play into this flexibility may build stronger and stickier products. For the initial creator, there is huge value in being able to transform your work across mediums, e.g., turning a video into a blog post, or a text explainer into a how-to animated video. This is a core feature of Gamma’s publishing platform. Users can generate a deck, document, or webpage from a prompt or uploaded file, and switch formats if needed.

From an external-facing perspective, these products can allow users to expose their workflow for others to iterate on. This might be a series of prompts or a combination of models — or simply a “copy” button for less technical users to mimic an output or aesthetic.

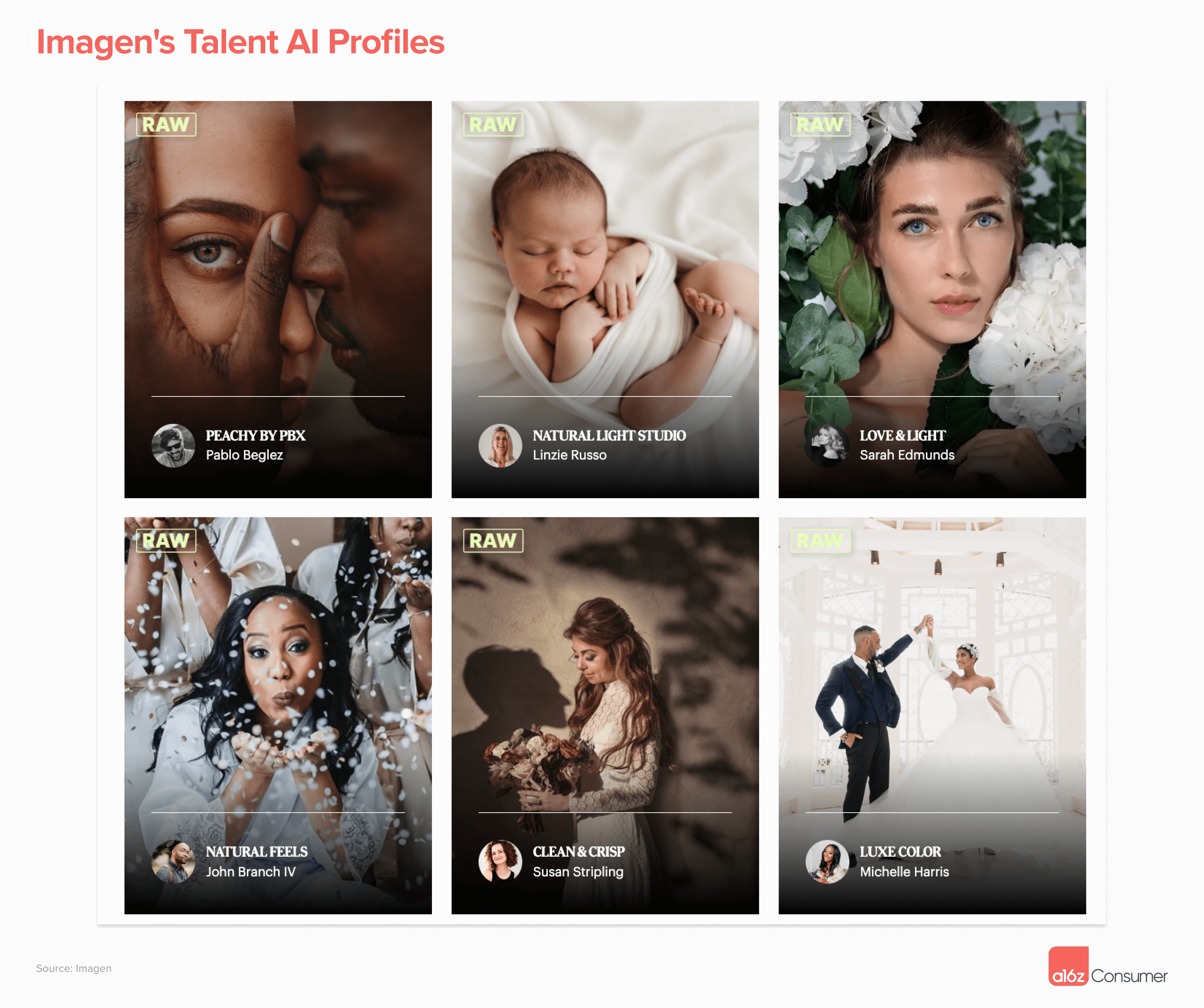

Imagen AI, an editing platform for professional photographers, is one example of this. The company trains a model on each photographer’s individual style, allowing them to batch edit more easily. However, users can also choose to edit in the style of industry-leading photographers who have made their profiles available on the platform.

How Will Prosumer Products Evolve?

It’s still early days for the next generation of prosumer tools. While existing tools can finally generate core assets well enough to support meaningful workflows, most products still focus on only one type of content and are fairly limited in functionality. Here are a few things we’re hoping to see in the coming months:

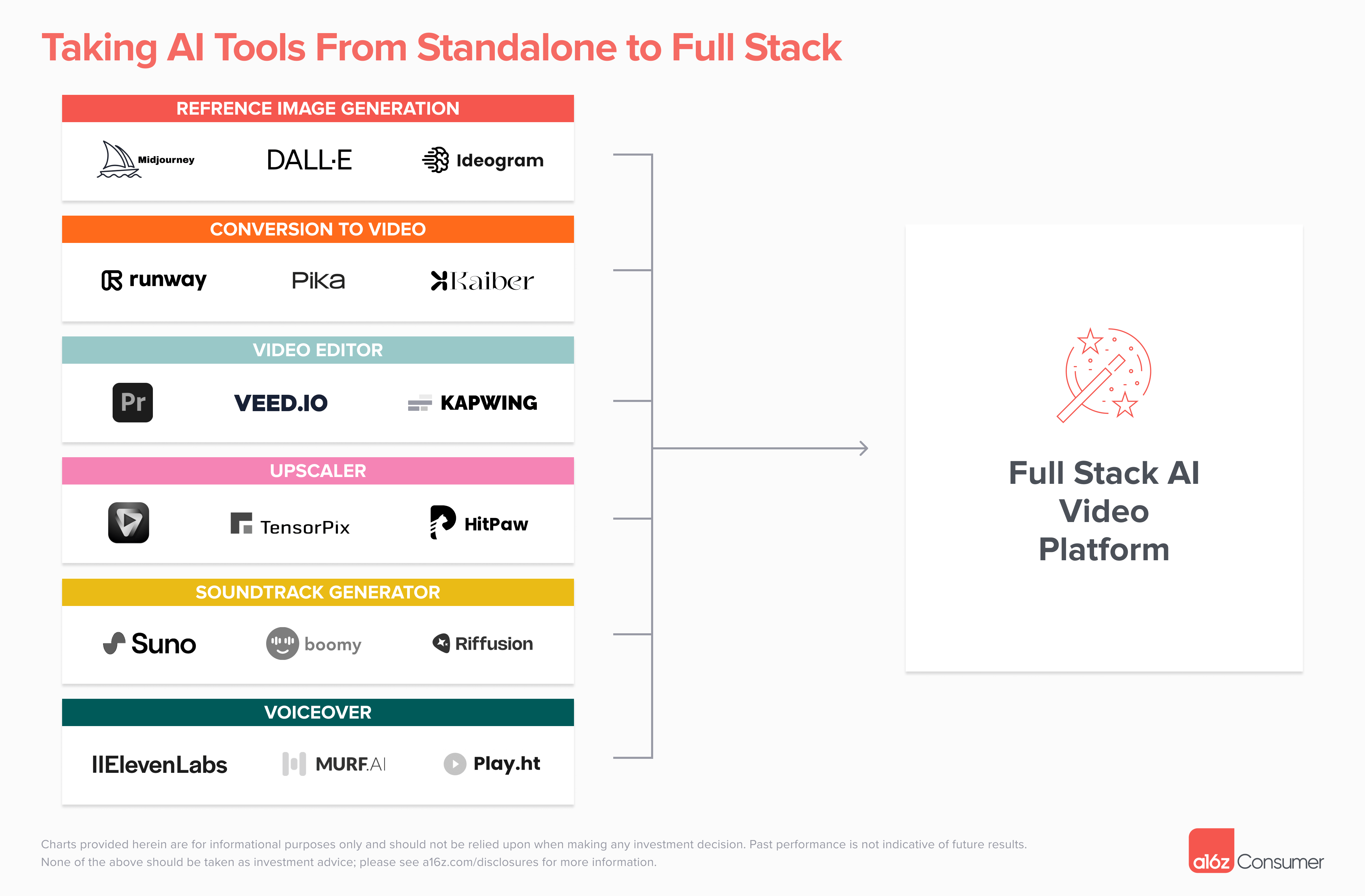

1. Editing tools that combine content modalities. Video might be the best example of this. Today, creating a short film with AI requires generating multiple clips in a product like Pika or Runway, and then moving them to another platform like CapCut or Kapwing for editing or sound mixing (or adding a voice that was generated elsewhere!).

What if you could do every step of this process in one platform? We expect that some of the emerging generation products will be able to both add more workflow features and expand into other types of content generation. This could be done by training their own models, leveraging open source models, or partnering with other players. We may also see a new standalone, AI-native editor emerge that enables users to “plug in” different models.

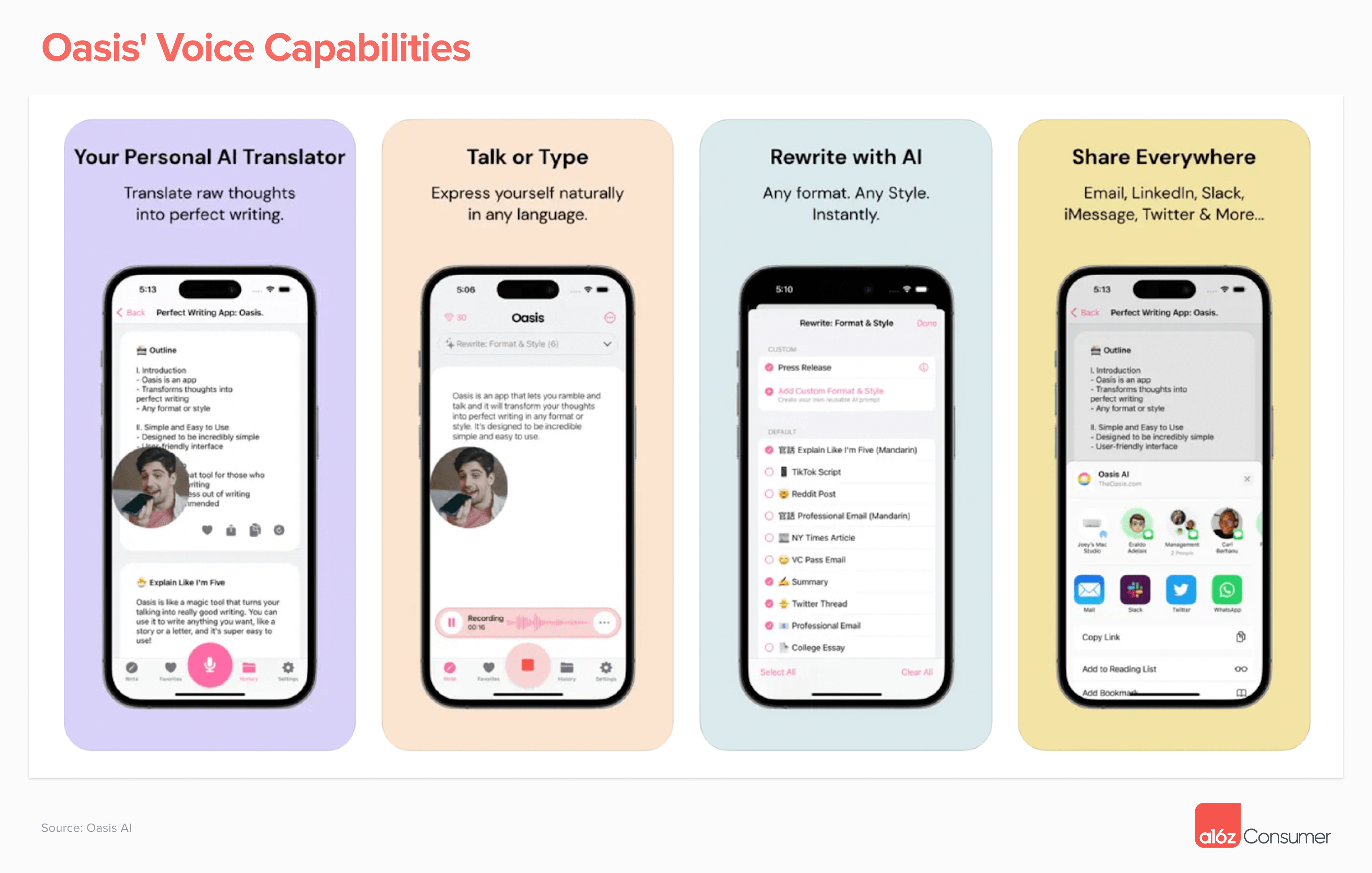

2. Products that utilize different modes of interaction. Text prompts aren’t always the most effective way to communicate with AI products. We believe that you should be able to work with generative tools like you’d collaborate with a human brainstorming partner — whether that’s via speech, sketches, or sharing inspo photos.

We’re particularly excited about voice as a modality that allows users to share more sophisticated and complex thoughts (or simply ramble in a way that’s not possible with text). Such products have already started to emerge, with Oasis, TalkNotes, and AudioPen all able to transform voice notes into emails, blog posts, or tweets. We expect audio and even video as an input source to arrive in many more workflow products, transforming how and when users can get work done.

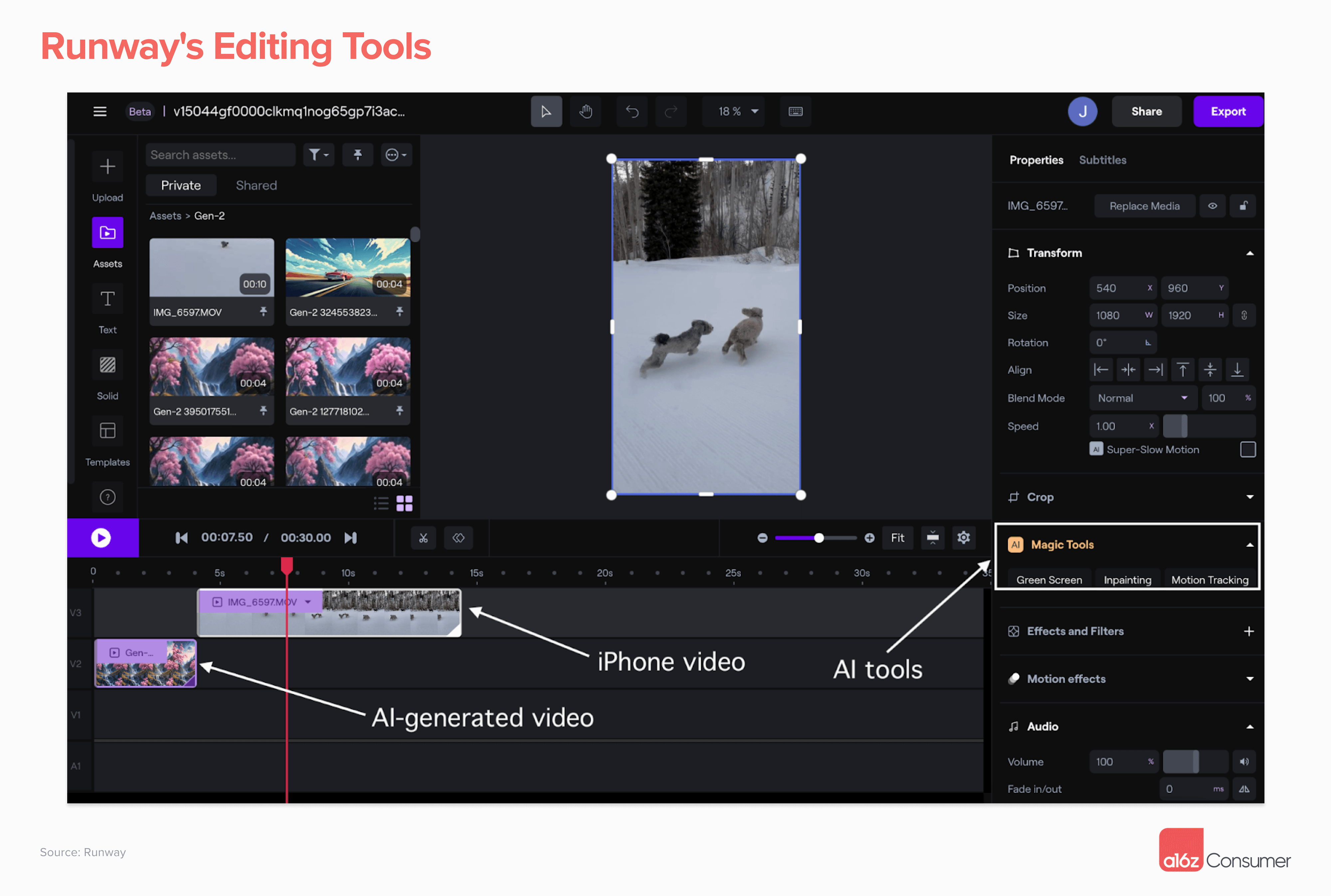

3. Products that treat human and AI-generated content as equal citizens. We’d love to see tools that enable you to work with AI and human content side by side. Most products today are focused on one or the other. For example, they’re great at enhancing real photos, but do nothing for AI-generated images. Or they can generate a new video, but can’t enhance or restyle a clip from your iPhone.

In the future, we expect that most professional content producers will work with a mix of AI and human-generated content. The products they use should welcome both types of content, and even make it easier to combine them. Runway’s editing tools exemplify this. You can pull in clips and images from the company’s generative models and upload real assets to use in the same timeline. You can then use the company’s “magic tools,” such as inpainting and green screen, on both types of content.

Content workflow products, which we’ve focused on here, are just one important component of the future of prosumer software. Come back soon for our deep dive into a second key component — productivity tools — which we feel are equally ripe for reinvention in the AI era.

If you’re working on something here, reach out! We’d love to hear from you. You can find us on X at @illscience, @venturetwins, and @omooretweets.

“Andreessen Horowitz is a private American venture capital firm, founded in 2009 by Marc Andreessen and Ben Horowitz. The company is headquartered in Menlo Park, California. As of April 2023, Andreessen Horowitz ranks first on the list of venture capital firms by AUM.”
