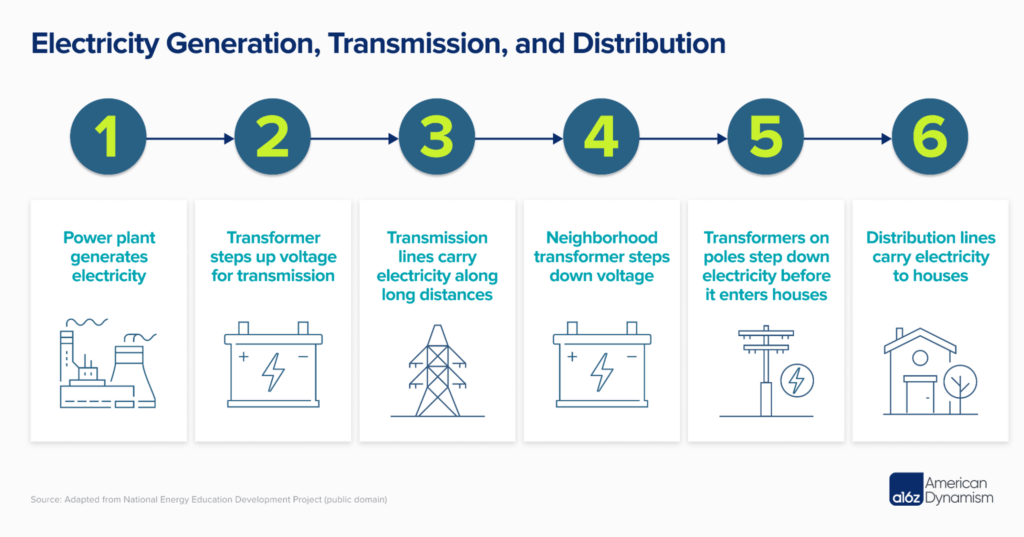

The electric grid, a vast and complex system of wires and power plants, is essential to our economy and underpins our industrial strength. Currently, we face a critical challenge: our electricity demands — expected to nearly double by 2040 due to factors like AI compute, reshoring, and “electrification” — are soaring, but our grid infrastructure and operations struggle to keep pace.

To seize a future of energy abundance, we must simplify the generation, transmission, and consumption of electricity; this entails decentralizing the grid. Big power plants and long power lines are burdensome to build, but technologies like solar, batteries, and advanced nuclear reactors present new possibilities. It is these and other more “local” technologies, which can be placed directly on-site and circumvent costly long-haul wiring, that will help support significant load growth over the coming decades.

While historical industrial expansion relied on large, centralized power plants, the 21st century marks a shift towards decentralized and intermittent energy sources, transitioning from a “hub and spoke” model to more of a distributed network. Of course, such evolutions breed new challenges, and we need innovation to bridge the gap.

Growing pains

The United States electric grid comprises three major interconnections (East, West, and Texas), managed by 17 NERC coordinators, with ISOs (independent system operators) and RTOs (regional transmission organizations) overseeing regional economics and infrastructure. However, actual power generation and delivery are handled by local utilities or cooperatives. This structure functioned in an era of low load growth, but expanding the grid’s infrastructure to meet today’s demand is becoming increasingly challenging and expensive.

Connection issues

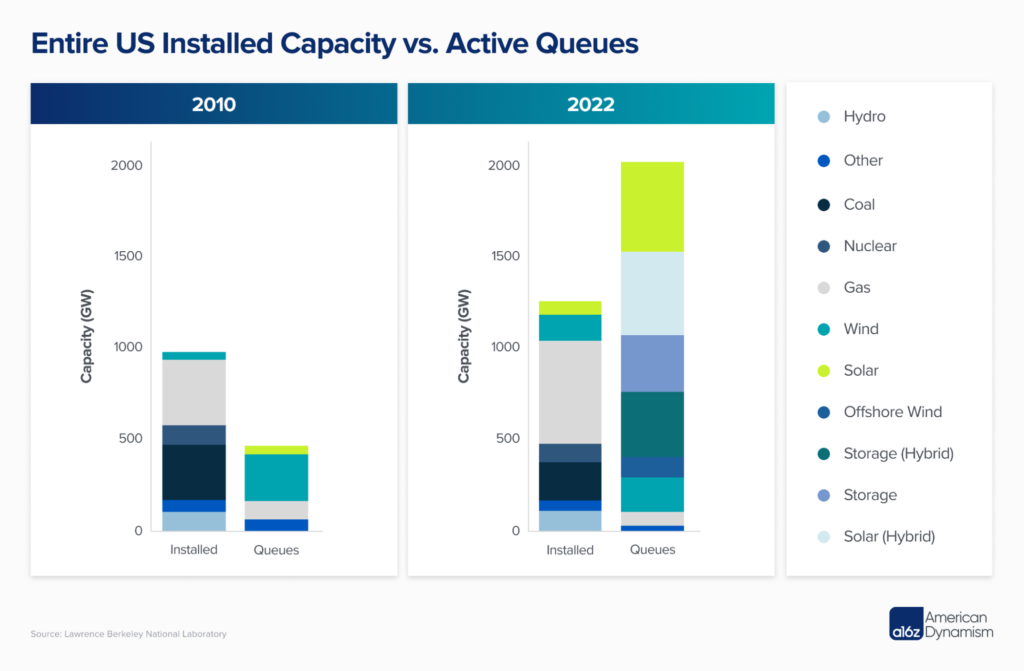

Grid operators use an interconnection queue to manage new asset connections, evaluating if the grid can support the added power at that location without imbalance, and determining the cost of necessary upgrades. Today, more than 2,000 gigawatts (GW) are waiting to connect, with over 700 GW of projects entering queues in 2022 alone. This is a lot: the entire United States electric grid only has 1,200 GW of installed generation capacity.

In reality, however, many projects withdraw after confronting the costs of grid connection. Historically, only 10-20% of queued projects have materialized, often taking over 5 years post-application to finally connect — and those timelines are only lengthening. Developers frequently submit multiple speculative proposals to identify the cheapest interconnection point, then withdraw unfavorable ones after costs are known, complicating feasibility studies. Because of this surge in applications, CAISO, California’s grid operator, was forced to stop accepting any new requests in 2022, and plans to do so again in 2024.
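To put the queue figures in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above and makes one loud assumption: that the historical 10-20% completion rate, which describes projects, can be applied directly to queued capacity. The function name is illustrative.

```python
# Figures quoted in the text above.
QUEUE_GW = 2000                  # capacity waiting in interconnection queues
INSTALLED_GW = 1200              # installed U.S. generation capacity
COMPLETION_RATES = (0.10, 0.20)  # historical share of queued projects built


def expected_buildout_gw(queue_gw: float, completion_rate: float) -> float:
    """Capacity expected to connect if a fixed share of the queue is built.

    Assumption: the completion rate applies to capacity, not just project count.
    """
    return queue_gw * completion_rate


if __name__ == "__main__":
    print(f"Queue is {QUEUE_GW / INSTALLED_GW:.2f}x installed capacity")
    for rate in COMPLETION_RATES:
        gw = expected_buildout_gw(QUEUE_GW, rate)
        print(f"At {rate:.0%} completion: {gw:.0f} GW "
              f"({gw / INSTALLED_GW:.0%} of installed capacity)")
```

Under that assumption, a queue larger than the entire installed fleet shrinks to roughly 200 to 400 GW of capacity that actually connects.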

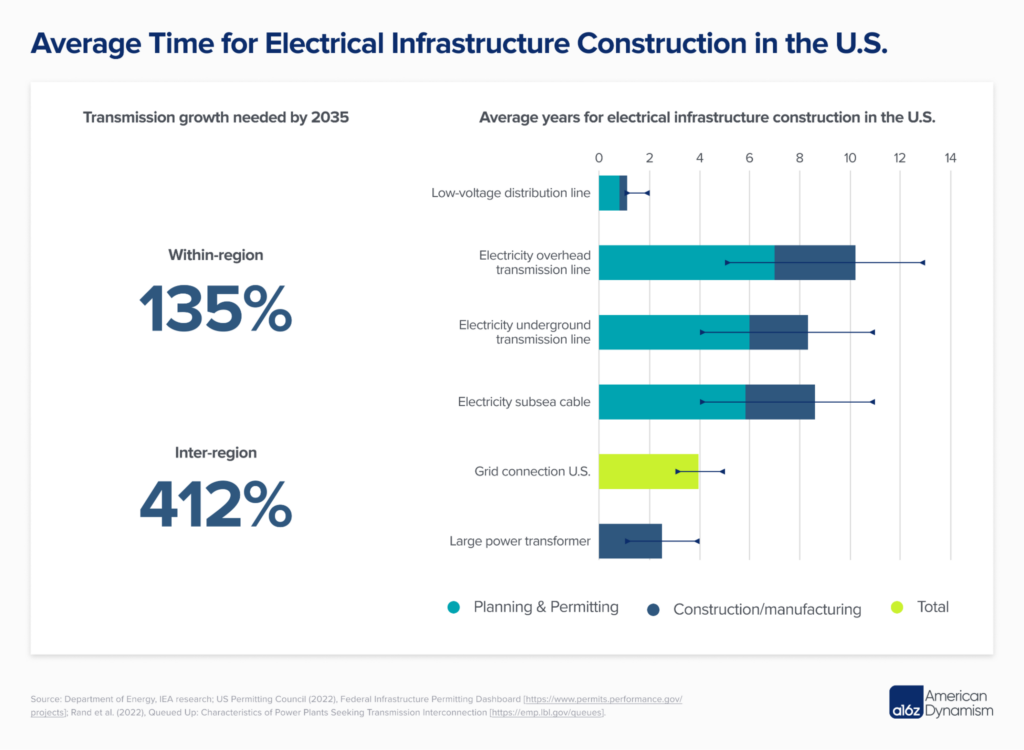

This is a critical rate limiter and cost driver in our energy transition. A recent report by the Department of Energy found that to meet high-load growth by 2035, within-region transmission to integrate new assets must increase by 128% and inter-region transmission by 412%. Even far more optimistic projections estimate 64% and 34% growth, respectively.

There are proposed reforms to help alleviate this development backlog. The Federal Energy Regulatory Commission (FERC) is pushing a “first-ready, first-served” policy, adding fees to filter proposals and speed up reviews. The Electric Reliability Council of Texas (ERCOT) utilizes a “connect and manage” method that allows for quicker connections, but disconnects projects if they threaten grid reliability – this has been remarkably successful in quickly adding new grid assets. While these policies mark progress, streamlining other regulations, such as NEPA, is also crucial to expedite buildout.

But even once projects are approved, grid construction still faces supply chain hurdles, including lead times of more than 12 months and a 400% price surge for large power transformers, compounded by a shortage of specialized steel. Achieving a federal goal to grow transformer manufacturing hinges on also supporting the electrical steel industry, especially with upcoming 2027 efficiency standards. All of this comes at a time when grid outages (largely weather-related) are at a 20-year high, necessitating replacement hardware.

Delivery not included

Ultimately, cost overruns in building grid infrastructure show up as higher prices for consumers. The “retail price” that consumers pay is a combination of wholesale prices (generation costs) and delivery fees (the cost of the infrastructure needed to move that electricity to you). Critically, while the price to generate electricity has declined with cheap renewables and natural gas, the price to deliver it has increased by a far greater amount.

There are many reasons for this. Utilities use distribution charges to offset losses from customer-generated power, aiming to secure revenue from fixed-return infrastructure investments (similar to cost-plus defense contracting). Renewable energy development requires extending power lines to remote areas, and these lines are used less due to intermittency. Additionally, infrastructure designed for peak demand becomes inefficient and costly as load becomes more volatile with greater electrification and self-generation.

Policy and market adjustments are responding to these rising delivery costs, with California’s high adoption of distributed power systems, such as rooftop solar, serving as a notable example.

California’s Net Energy Metering (NEM) program initially let homeowners sell surplus solar power back to the grid at retail prices, ignoring the costs to utilities for power distribution. Under recent changes, utilities essentially buy back that electricity at variable wholesale rates, reducing earnings for solar panel owners during peak generation times, which often coincide with the lowest electricity prices. This adjustment lengthens the payback period for solar installations, pushing homeowners and businesses to invest in storage to sell energy at more profitable times.
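The effect of the export rate on payback can be sketched with a simple, undiscounted calculation. Every number below (system cost, annual output, self-consumption share, and both rates) is a hypothetical placeholder chosen for illustration, not data from California’s program, and the function ignores incentives, panel degradation, and time-varying prices.

```python
def simple_payback_years(system_cost: float, annual_kwh: float,
                         self_use_share: float, retail_rate: float,
                         export_rate: float) -> float:
    """Years to recoup the system cost, with no discounting.

    Self-consumed energy is valued at the retail rate (avoided purchases);
    exported energy is valued at whatever the utility pays for it.
    """
    self_used_kwh = annual_kwh * self_use_share
    exported_kwh = annual_kwh - self_used_kwh
    annual_value = self_used_kwh * retail_rate + exported_kwh * export_rate
    return system_cost / annual_value


# Hypothetical household: $15,000 system, 8,000 kWh/yr, 40% used on-site.
COMMON = dict(system_cost=15_000, annual_kwh=8_000, self_use_share=0.4,
              retail_rate=0.30)

# Exports credited at the retail rate (original NEM-style compensation).
retail_credit = simple_payback_years(export_rate=0.30, **COMMON)
# Exports credited at a hypothetical wholesale-like rate.
wholesale_credit = simple_payback_years(export_rate=0.08, **COMMON)

if __name__ == "__main__":
    print(f"Retail-rate exports:    {retail_credit:.1f} years")
    print(f"Wholesale-rate exports: {wholesale_credit:.1f} years")
```

With these placeholder inputs the payback period nearly doubles once exports earn the lower rate, which is the incentive to add storage described above.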

California utilities also proposed a billing model where fixed charges depend on income level and usage charges depend on consumption. This aimed to make wealthier customers cover more of the grid infrastructure costs, protecting lower-income individuals from rising retail power prices. Although this specific policy was recently shelved in favor of a similar, but less extreme, version, ideas like this might lead affluent users to disconnect from the grid entirely. Defection could lead to higher costs for remaining users and trigger a “death spiral.” Some argue this is already happening in Hawaii’s electricity market and in areas rapidly switching to electric heat pumps.

Keeping the lights on

Electricity is not magic; grid operations are complicated. At all times, electricity generated must match electricity demand, or “load”; this is what people mean when they say “balance the grid.” At a high level, grid stability relies on maintaining a constant frequency — 60 Hz in the United States.

Congestion from exceeding power line capacities leads to curtailments (dumping electricity) and local price disparities. Frequency deviations can also damage equipment such as generators and motors. Wind, solar, and batteries, which are inverter-based resources lacking inertia, also complicate frequency stabilization as they proliferate. In extreme cases, deviations may provoke blackouts or even destroy grid-connected equipment.

Because of the grid’s inherent fragility, careful consideration must be given to the assets connected to it, aligning reliable supply with forecasted demand. The growth of intermittent power sources (unreliable supply) coinciding with the rise of “electrification” (spiky demand) is causing serious challenges.

When is enough, enough?

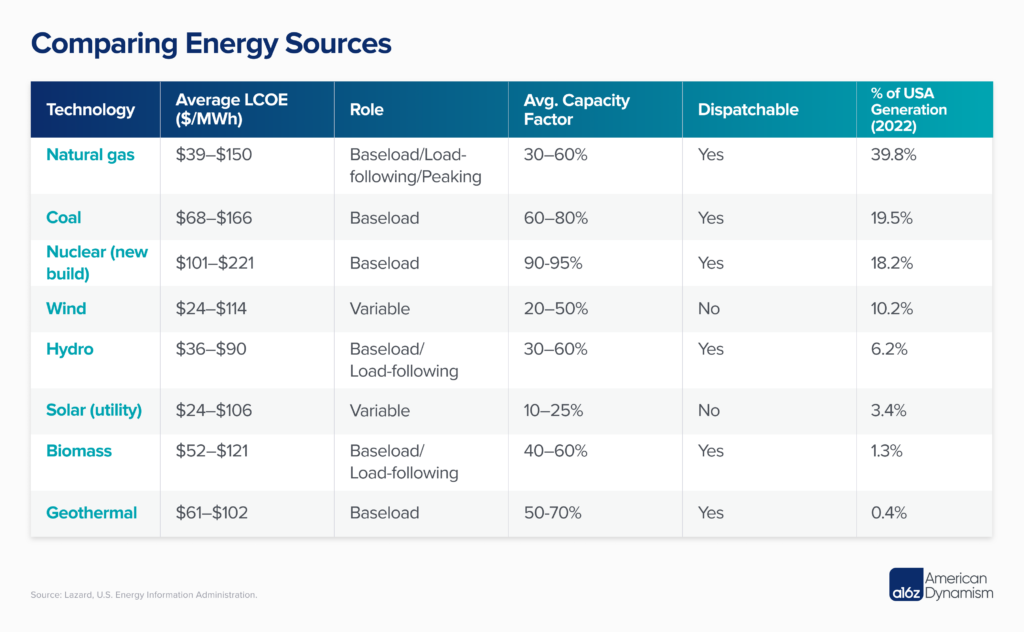

Around two-thirds of load is balanced by wholesale markets through (mostly) day-ahead auctions, where prices are determined by the cost of the last unit of power needed. Renewables, with essentially no marginal cost, typically underbid other sources when active, leading to price volatility: extreme lows when renewables meet demand and spikes when costlier sources are needed. (Note: bid price is different from levelized cost of energy, or LCOE.)
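The “last unit of power needed” rule can be illustrated with a minimal merit-order sketch. The offers, capacities, and prices below are hypothetical, and the sketch assumes a single uniform clearing price with no transmission constraints, which real day-ahead markets do not.

```python
from typing import NamedTuple


class Offer(NamedTuple):
    name: str
    mw: float      # capacity offered
    price: float   # offer price in $/MWh


def clearing_price(offers: list[Offer], demand_mw: float) -> float:
    """Return the price of the last (marginal) offer needed to meet demand."""
    served = 0.0
    # Accept the cheapest offers first until demand is covered.
    for offer in sorted(offers, key=lambda o: o.price):
        served += offer.mw
        if served >= demand_mw:
            return offer.price
    raise ValueError("offers cannot cover demand")


# Hypothetical offer stack for one hour.
sunny = [
    Offer("solar", 300, 0.0),
    Offer("wind", 200, 0.0),
    Offer("gas combined cycle", 400, 40.0),
    Offer("gas peaker", 200, 150.0),
]
# Same stack with renewables producing nothing.
dark = [o._replace(mw=0.0) if o.price == 0.0 else o for o in sunny]

if __name__ == "__main__":
    print(clearing_price(sunny, 450))  # renewables alone cover demand
    print(clearing_price(sunny, 800))  # combined cycle is marginal
    print(clearing_price(dark, 450))   # peaker is marginal
```

The same 450 MW of demand clears at the renewable offer price in the first case and at the peaker’s price in the last, which is the volatility the paragraph describes.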

The unpredictability of solar and wind, alongside the shutdown of aging fossil fuel plants, strains grid stability. This leads to both blackouts (underproduction) and curtailment (overproduction), like the 2,400 GWh California wasted in 2022. Addressing this requires investment in energy storage and transmission improvements (discussed below).

Moreover, as power supply becomes more unpredictable, the role of natural gas grows due to its cost-effectiveness and flexibility. Natural gas often backs up renewables with “peaker plants” that activate only when needed. In general, the intermittency of solar and wind means natural gas plants, and other types of plants, earn profits only intermittently, sometimes even running continuously at a loss for technical reasons. Consequently, when renewables are offline and “peaker plants” set wholesale prices, consumers face higher costs and greater volatility.

The demand for electricity is also changing shape. Technologies like heat pumps, while energy-efficient, can cause winter load spikes when renewable output may be low. This requires grid operators to keep a buffer of power assets, and often to ignore renewable sources in their resource adequacy planning. Grid operators typically adhere to a “1 in 10” rule, accepting a power shortfall once every decade, though the actual calculation is more complex. In ERCOT, which relies on price-spike incentives in lieu of a traditional capacity market, we’ve already seen “emergency reserves” grow as renewables enter the grid.

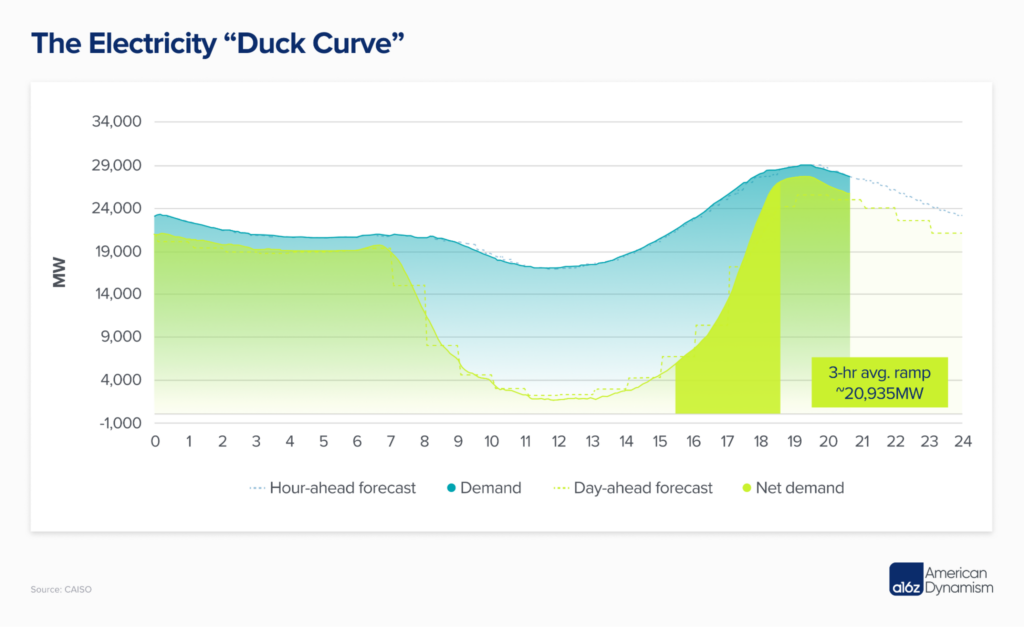

High-solar-penetration areas, like California, also face the “duck curve,” requiring grid operators to quickly ramp up over 20 GW of power as daylight fades and demand rises. This is technologically and economically challenging for plants intended for constant output.
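The evening ramp is easiest to see as net load, meaning demand minus solar output. The sketch below uses made-up hourly values for a single afternoon and evening; they are illustrative only and are not CAISO data.

```python
def net_load(demand_gw: list[float], solar_gw: list[float]) -> list[float]:
    """Demand left for dispatchable plants after subtracting solar output."""
    return [d - s for d, s in zip(demand_gw, solar_gw)]


def max_ramp(net_gw: list[float], window_hours: int = 3) -> float:
    """Largest increase in net load over any window of the given length."""
    return max(net_gw[i + window_hours] - net_gw[i]
               for i in range(len(net_gw) - window_hours))


# Hypothetical hourly values from 12:00 to 20:00, in GW.
DEMAND = [25, 25, 26, 27, 29, 31, 33, 34, 33]
SOLAR = [17, 17, 16, 13, 9, 4, 1, 0, 0]

if __name__ == "__main__":
    net = net_load(DEMAND, SOLAR)
    print("Net load (GW):", net)
    print("Steepest 3-hour ramp (GW):", max_ramp(net))
```

Demand rises only modestly over the evening, but because solar falls to zero at the same time, other plants must cover both changes at once.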

Renewable intermittency incurs hidden costs, forcing grid operators to embrace risk or invest in new assets. While the levelized cost of energy assesses a project’s economic feasibility, it oversimplifies the asset’s true value to the broader grid. LCOE does, however, underscore the economic challenges of constructing new assets, like nuclear plants. Despite being costlier than natural gas today, nuclear offers a compelling path to decarbonizing reliable power. We just need to scale reactor buildout.

But we can’t just rely on nuclear power. Relying solely on a single energy source is risky, as shown by France’s nuclear challenges during Russian energy sanctions and the southern United States’ issues with natural gas in cold weather, not to mention commodity price swings. Regions with lots of renewables, like California, also face uncertainty due to routine reliance on imports. Even places operating at nearly 100% clean energy, like Iceland or Scandinavia, maintain reliable backups or import options during crises.

Getting smart

As electricity demand grows, the grid struggles to manage growing complexity from both decentralization and intermittent renewables. We cannot brute force this shift; if we’re going to do it, we really need to get smart.

The current grid, aging and “dumb,” depends on power plants to align production based on predicted demand, while making small, real-time adjustments to ensure stability. Originally designed for one-way flow from large power plants, the grid is challenged by the concept of multiple small sources contributing power in various directions, like your rooftop solar charging a neighbor’s electric vehicle. Moreover, the lack of real visibility into live power flow presents looming issues, specifically at the distribution level.

Residential solar, batteries, advanced nuclear, and (possibly) geothermal provide decentralized power that reduces the need for infrastructure buildout. Yet integration into an evolving, volatile grid still demands innovative solutions. Additionally, the efficiency of even utility-scale power systems can be significantly improved by local storage and demand-side responses – like turning down your thermostat when the grid is strained – that reduce the need to build underutilized assets that are online for only brief peak periods.

The “smart grid” aims to accomplish all of this and more, and can be organized into three main technology groups:

- Ahead-of-the-meter

  - Dynamic line rating, solid-state transformers, voltage management and power flow systems, better conductors, infrastructure monitoring, grid-scale power generation, grid-scale storage, etc.

- Behind-the-meter

  - Heat pumps, electric appliances, residential solar, home energy storage, electric vehicle chargers, smart thermostats, smart meters, microreactors and small modular reactors, microgrids, etc.

- Grid software

  - Virtual power plants, better forecasting, device management, energy data infrastructure, cybersecurity, ADMS, interconnection planning, electricity financial instruments, bilateral agreement automation, etc.

Specifically, there are two broad trends that are crucial for the “smart grid” future.

First, we need to build a lot of energy storage to smooth out peak load locally and stabilize intermittent power supply across the grid. Batteries are already critical for small bursts of power, and, as prices continue to decline, even longer periods of time could be covered. But scaling hundreds of gigawatt-hours of batteries will also require expanded supply chains. Fortunately, strong economics will likely continue to accelerate deployment; entrepreneurs should seek to connect batteries anywhere they can.

Second, we need to accelerate the deployment and integration of a network of distributed energy assets. Anything that can be electrified will be electrified. Allowing these systems to interact with home and grid-scale energy systems will require a variety of new solutions. Aggregations of “smart” devices, like electric vehicles or thermostats, could even form virtual power plants that mimic the behavior of much larger energy assets.
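As a rough illustration of how a virtual power plant might aggregate small devices, the sketch below greedily selects opted-in devices until a requested amount of flexibility is reached. The `Device` fields, the device list, and the largest-first rule are all assumptions made for this example; real aggregators also account for duration, customer comfort, and network location.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    flexible_kw: float  # load the device can shed or power it can inject
    available: bool     # whether the owner has opted in right now


def dispatch(devices: list[Device], target_kw: float) -> list[Device]:
    """Pick available devices, largest first, until the target is met.

    If the target cannot be met, every available device is returned.
    """
    chosen: list[Device] = []
    total = 0.0
    for d in sorted(devices, key=lambda d: d.flexible_kw, reverse=True):
        if total >= target_kw:
            break
        if d.available:
            chosen.append(d)
            total += d.flexible_kw
    return chosen


# Hypothetical fleet on one neighborhood feeder.
FLEET = [
    Device("EV charger", 7.0, True),
    Device("smart thermostat", 1.5, True),
    Device("home battery", 5.0, False),
    Device("water heater", 4.0, True),
]

if __name__ == "__main__":
    picked = dispatch(FLEET, target_kw=10.0)
    print([d.name for d in picked], sum(d.flexible_kw for d in picked), "kW")
```

Scaled to many thousands of devices, the same idea lets an aggregator offer the grid operator a single block of flexible capacity.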

What’s next?

A core challenge in grid expansion is carefully balancing the shift between centralized and decentralized systems, considering economic and reliability concerns. Centralized grids, while straightforward and (generally) reliable, face issues with complex demand fluctuations and high fixed costs – for example, most large nuclear plants globally are government-financed and China can build a ton of big power assets, but it does so very inefficiently. Decentralized grids, while still in early stages of deployment, are cheap but don’t automatically ensure reliable power, as preferences in some rural Indian communities indicate.

To be clear, the centralized grid we have today will certainly not disappear – in fact, it also needs to grow in size – but it will be consumed by networks of decentralized assets growing around it. Ratepayers will increasingly adopt self-generation and storage, challenging traditional electricity monopolies and prompting regulatory and market reform. This self-generation trend will reach its extreme in energy-intensive industries that especially prioritize reliability – Amazon and Microsoft are already pursuing nuclear-powered data centers, and we should do everything we can to accelerate the development and deployment of new reactors.

More broadly, ratepayers want reliable, affordable, and clean power, typically in that order. ERCOT, with its blessed geography, innovation-receptive “energy-only” markets, and relaxed interconnection policy, will be key to watch in order to see if, when, and how this is achieved with a decentralized grid. And successfully navigating this shift will, no doubt, result in significant economic growth.

Critically, building this decentralized grid demands our most talented entrepreneurs and engineers: we need a “smart grid” with serious innovation across ahead-of-the-meter, behind-the-meter, and grid software technology. Policy and economic tailwinds will accelerate this electricity evolution, but it will fall to the private sector to ensure this decentralized grid works better than the old one.

The future of the United States’ electric grid lies in harnessing new technology and embracing free markets to overcome our nation’s challenges, paving the way for a more efficient and dynamic energy landscape. This is one of the great undertakings of the 21st century, and we must meet its challenges.

The world is changing fast, and the electric grid must change with it. If you’re building the solutions here, get in touch.

“Andreessen Horowitz is a private American venture capital firm, founded in 2009 by Marc Andreessen and Ben Horowitz. The company is headquartered in Menlo Park, California. As of April 2023, Andreessen Horowitz ranks first on the list of venture capital firms by AUM.”
