In an AI world, leaders who speak technology’s language gain an edge. But that doesn’t mean every manager needs a computer science degree.

A handle on a handful of basics goes a long way toward preparing strategic leaders for today’s reality: Almost all businesses now touch technology—some more so than others.

“Every company today needs to think of themselves as a tech company,” says Andy Wu, the Arjun and Minoo Melwani Family Associate Professor of Business Administration at Harvard Business School. “Whether you’re in retailing or you’re a law firm or a real estate company, technology is an important part of how businesses operate, and that can only increase going forward.”

In a new industry and background note, Wu and HBS research associate Matt Higgins distill the fundamentals of digital technology that can help leaders achieve their strategic priorities.

It’s vital for managers to engage with some of the basics of computer science because “they give people a framework to think about the direction that technology will evolve and the opportunities for value creation,” Wu says. Not only that, but “increasingly, the tech architectures inside firms are a source of competitive advantage.”

Wu suggests executives study up on these five computer science principles—and leave the rest to their tech teams.

1. Think in the abstract

One of the ways managers can avoid getting caught up in bits and bytes and microprocessor architectures is to embrace the concept of abstraction.

Abstraction means substituting a simplified model for the full and technically complex reality. It acts as a filter, enabling “MBAs interested in technology to skip the circuit-design courses,” Wu says.

Wu shares how his father, who studied computer science in college several decades ago, used punch cards to instruct the computer, an early method that offers a useful starting point for thinking abstractly. The computer would convert the punch cards into a series of zeros and ones that it could understand. Over time, punch cards gave way to the command line, which let users type their instructions as text commands, but the underlying mechanism was the same. Later came MS-DOS, then Windows 3.0, and so on.

“So, essentially, we added a layer of abstraction onto the punch card. Just adding these layers of abstractions completely changed who could use a computer and what you could use it for, even though the computer is intrinsically the same thing,” says Wu. “Generative AI chatbots like ChatGPT and the motion gestures control in the Apple Vision Pro continue to expand who can use a computer and what you can use it for.”
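For readers who want to see the idea in action, here is a minimal Python sketch (our own illustration, not drawn from Wu’s note; the function names are hypothetical) that performs the same addition at two levels of abstraction. The first function mimics, in simplified form, how an adder circuit combines bits with XOR and AND operations; the second simply uses `+` and lets the language hide every bit.

```python
def add_with_logic_gates(a: int, b: int) -> int:
    """Add two non-negative integers using only bitwise operations,
    a simplified stand-in for how an adder circuit combines bits."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    while b != 0:
        carry = a & b   # bit positions where both inputs are 1 produce a carry
        a = a ^ b       # add the bits while ignoring carries
        b = carry << 1  # move each carry into the next bit position
    return a


def add_high_level(a: int, b: int) -> int:
    """The same operation one layer of abstraction up: the bits are hidden."""
    return a + b


print(add_with_logic_gates(19, 23), add_high_level(19, 23))  # 42 42
```

Both functions produce the same answer; the machine does the same work either way, and only the amount of detail the user has to manage changes.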

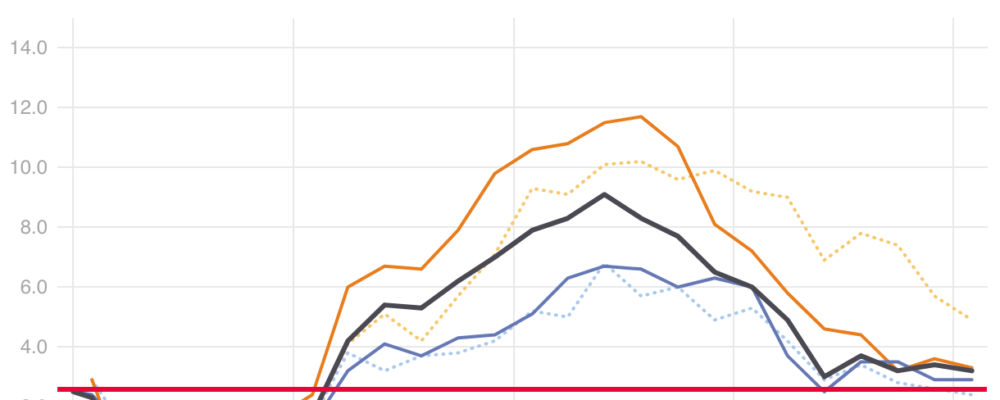

2. Platforms are power

In the beginning, Jeff Bezos built a website that sold books from its own inventory. Today, Amazon is a classic example of “platformization”: The company grew from an online bookstore into a vast technological hub that provides logistics and advertising services to third-party retailers, as well as the public cloud services that underlie much of the internet. Platforms attract developers and other third parties, which in turn broaden the platform’s reach and influence.

Wu points to the Apple iPhone as another example of this phenomenon. While Steve Jobs originally intended to limit the iPhone to just Apple’s own applications, the iPhone evolved into a platform with the introduction of the App Store and its ecosystem of services. Eight of the 10 most valuable companies in the world are currently pursuing platform strategies, Wu says.

Ultimately, platform strategies lead to the greatest outcome in business, “which is what we call a winner-take-all outcome,” Wu adds. This is because network effects allow platforms to grow exponentially, attracting ever more users and partners and eventually crowding out competitors.

3. Think like a sandwich

From the computer keyboard and user interface to the smallest circuit, computer systems consist of layers. Each layer has a specific job to do in recognizing and then communicating the user’s commands. Understanding the various levels can help managers focus on the big concepts without getting mired in minutiae.

Technology systems consist of five key layers, listed here from bottom to top: assembly language/machine code, instruction set, operating system, middleware, and application. Each layer presents distinct business opportunities for establishing strategic differentiation.

Companies can leverage their position within a layer to grow larger by pursuing strategies of integration (expanding into adjacent layers) or foreclosure (blocking competitors from using the platform). Over time, Microsoft has pursued both integration and foreclosure strategies with its Windows operating system, Wu says.

Companies that are new to a market don’t initially have the market power to integrate or foreclose, so they often build their opportunities around an “insert and mediate” strategy instead: slotting themselves between existing layers and mediating between them. Typically, Wu writes, insert and mediate works best when the new entrant “can offer something to both sides.” One example is Sun Microsystems’ development of Java, which let developers write a program once and run it on many different operating systems.
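The insert-and-mediate idea can be sketched in a few lines of Python. In this simplified model (our illustration, with hypothetical class and method names; it does not reflect how Java is actually built), two operating systems expose incompatible calls, and a middleware layer inserted between them and the application offers each side something: application developers write to one stable interface, and each operating system gains access to software written only once.

```python
from typing import Union


class OperatingSystemA:
    """Hypothetical lower layer with its own file-saving call."""

    def save_blob(self, path: str, data: str) -> str:
        return f"OS-A: stored {path!r} ({len(data)} chars)"


class OperatingSystemB:
    """A second hypothetical OS: different call name, different argument order."""

    def write_file(self, data: str, path: str) -> str:
        return f"OS-B: wrote {len(data)} chars to {path!r}"


class Middleware:
    """The inserted layer. It offers applications one stable `save` call
    and translates that call into whatever the OS beneath expects."""

    def __init__(self, os_layer: Union[OperatingSystemA, OperatingSystemB]) -> None:
        self._os = os_layer

    def save(self, path: str, data: str) -> str:
        if isinstance(self._os, OperatingSystemA):
            return self._os.save_blob(path, data)
        return self._os.write_file(data, path)


def application(runtime: Middleware) -> str:
    """Top layer: written once, against the middleware only."""
    return runtime.save("report.txt", "quarterly numbers")


for os_layer in (OperatingSystemA(), OperatingSystemB()):
    print(application(Middleware(os_layer)))
```

The same `application` function runs unchanged on both operating systems; only the middleware knows how they differ. That position between two sides is what gives an inserted layer its leverage.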

4. The two layers that run the show

As in society, technology systems depend on a set of clearly defined rules to operate quickly and effectively. Two layers—the instruction set architecture and the operating system—shoulder much of the responsibility for determining both what a platform can do and how it should do it.

Most people think of the operating system as what distinguishes one computer from another. In reality, the operating system is more like a chief executive: It allocates resources such as processor time and memory among competing programs, and it establishes and controls the workflow. In the simplest sense, it tells the computer how to get the work done.
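The chief-executive analogy can be made concrete with a toy scheduler. Real operating systems use far more sophisticated scheduling policies; the round-robin approach sketched here in Python, with hypothetical job names and time units, is simply one classic, easy-to-follow way an operating system can share a processor among competing programs.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def round_robin(jobs: Dict[str, int], time_slice: int) -> List[Tuple[str, int]]:
    """Toy scheduler: give each job at most `time_slice` units of CPU time in turn.

    `jobs` maps a (hypothetical) job name to the total units of work it needs.
    Returns the sequence of (job, units run) decisions the scheduler made.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue: Deque[Tuple[str, int]] = deque(jobs.items())
    timeline: List[Tuple[str, int]] = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # unfinished work goes to the back of the line
    return timeline


print(round_robin({"email": 3, "spreadsheet": 5, "backup": 2}, time_slice=2))
```

Each job gets a turn of at most two units before yielding, so no single program monopolizes the processor. That is the kind of resource allocation and workflow control the analogy describes.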

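Instruction set architectures, on the other hand, are the authoritative source for what a computer can do: They define the basic operations, such as arithmetic and moving data, that a processor can carry out, and therefore the services it can offer to users. Widely used examples include x86 and Arm.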
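To illustrate what it means for the instruction set to be the authoritative source for what a computer can do, here is a toy interpreter in Python for a hypothetical four-instruction machine. The opcodes and register names are invented for this sketch; real instruction sets such as x86 and Arm define far more instructions and operate on binary encodings rather than text.

```python
from typing import Dict, List, Tuple

# Hypothetical toy ISA: the complete list of operations this "machine" supports.
SUPPORTED_OPCODES = {"LOAD", "ADD", "MUL", "PRINT"}

Instruction = Tuple[str, ...]


def run(program: List[Instruction]) -> List[int]:
    """Execute `program` on a toy machine with named registers.

    Any opcode outside SUPPORTED_OPCODES is rejected: the instruction set,
    not the programmer, defines what the machine can do.
    """
    registers: Dict[str, int] = {}
    output: List[int] = []
    for op, *args in program:
        if op not in SUPPORTED_OPCODES:
            raise ValueError(f"unsupported instruction: {op}")
        if op == "LOAD":      # LOAD reg value -> reg = value
            registers[args[0]] = int(args[1])
        elif op == "ADD":     # ADD dst src    -> dst = dst + src
            registers[args[0]] += registers[args[1]]
        elif op == "MUL":     # MUL dst src    -> dst = dst * src
            registers[args[0]] *= registers[args[1]]
        else:                 # PRINT reg      -> emit the register's value
            output.append(registers[args[0]])
    return output


program = [("LOAD", "a", "6"), ("LOAD", "b", "7"), ("MUL", "a", "b"), ("PRINT", "a")]
print(run(program))  # [42]
```

Any program, however clever, can only be assembled from the operations the instruction set allows; a request for an unsupported instruction such as `DIV` is simply rejected.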

Leaders need to understand the operating system and instruction set architecture because these layers help shape an organization’s technology strategy, including the nature of its vendor relationships and other variables.

5. Applications: Abstraction’s highest level

For end users, the application layer opens up worlds of possibilities. Applications represent the ultimate abstraction: They allow users to perform specific tasks without ever considering the complex interactions taking place in the layers underneath.

The concept of an application has changed dramatically over time. Initially, applications performed single tasks (such as spreadsheets) and only worked on specific machines (such as the Apple II). Today, applications are multifunctional and complex, often blurring the lines between various layers.

One example is Google Chrome, initially considered a tool for browsing the web. As Wu writes, “Today, it is fair to say that Chrome is both: an application capable of running on most operating systems, and an operating system that runs the Chrome suite of software and extensions when it is configured with ChromeOS as the user-facing operating system.”

Feedback or ideas to share? Email the Working Knowledge team at hbswk@hbs.edu.

Image: Image created by HBSWK using asset from AdobeStock/beast01
